AI might be the only way test automation engineers can keep up with the complexity of today's software. That's just one of the takeaways shared by experts at last week's Triangle Information Systems Quality Association (TISQA) conference, held in North Carolina's Research Triangle.

TISQA is a volunteer software testing organization that recently hosted this 470-attendee get-together. Attendees said they actually prefer the smaller, local atmosphere over the sometimes bloated, marketing-heavy, big-name conferences. Local conferences tend to offer a more practical focus and more relevant networking opportunities, they said. Although some small conferences have trouble drawing popular speakers, TISQA does not.

Topics focused on testing concerns, with tracks on testing techniques, test management and metrics, test automation, mobile and performance testing, and some DevOps topics.

Here's a rundown of key takeaways for test engineers on subjects ranging from the latest service testing techniques to test automation, consumer-driven contract testing, and the compelling need for AI in testing.

Mobile testing strategy: Just the data-driven answers please

Jason Arbon, who has worked as an engineer and tester at Microsoft and Google, is now CEO and co-founder of the software testing firm Test.ai. His experience with top companies in the software industry made for an interesting presentation—especially toward the end.

Arbon started by sharing data he gathered from the Apple and Google mobile app stores—and the mobile strategy advice that he gleaned from those stats. Some of the more interesting tidbits he shared included:

- For game apps, just focus on making a good game. As long as the mechanics are fun, most people won’t care about stability, elegance, or security issues.

- For more utilitarian apps—like a finance app—usability is the number one concern. Stability is still not a big one as long as the app is useful.

- Many aspects of app performance are out of your control, so notify your users in the app if they’re taking a performance hit due to network connectivity or something else outside of your control.

- When you develop features for your app, read competitor app reviews and see what things they get criticized for. Then focus on making those things better than your competition.

- According to an analysis of thousands of apps, the average review doesn’t change based on an app shipping frequently or infrequently. So if you don’t think you need to ship a new version every two weeks, don’t.

Arbon asserted that it’s okay to release rarely once your app has gained a following. One app he was involved with has been in the top 30 range in the Apple App Store for years. At first they were shipping updates all the time, but as soon as their average review was high, they shifted to an extremely infrequent release schedule. Why? Because it was too risky to make significant changes when people already liked it.

He also recounted how after Starbucks made a significant update to its app, it was inundated with bad reviews that brought its star rating crashing down. And when management saw the reviews they fired the entire test team. Whether that move was good or bad was beside the point. As engineers and testers you need to think about more than just making sure your app works.

Is AI testing’s last, best hope?

The final segment of Arbon’s talk focused on the futility of current test automation practices. He described what a lot of testers in the room had already experienced—the common stages of a large test automation effort that typically start with optimism, end with defeat, and begin again with optimism, believing that the new team would overcome the challenges that the last team faced.

“Everyone fails at test automation. Even Google and Microsoft fail at this over and over again. It doesn’t matter if you have top talent. It doesn’t matter if you built many of the tools in-house.”

—Jason Arbon

At this point, Arbon apologized for bumming everyone out in the last session of the day.

Arbon couldn’t stress the challenges of test automation enough, saying that he agrees with a tester who once told him: “Your best engineers should be doing your test automation.”

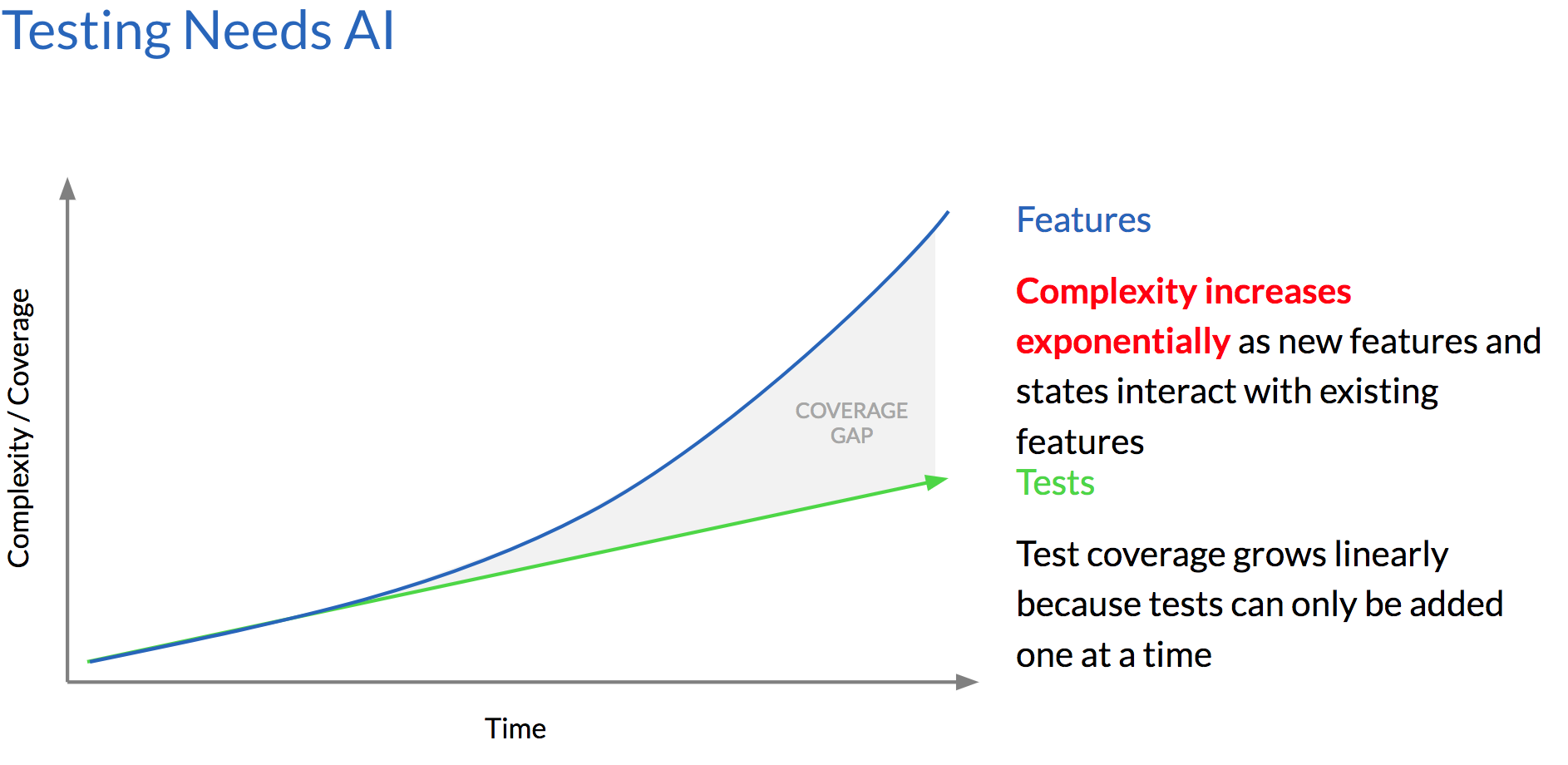

Test automation challenges are further amplified in mobile testing, he says, because of the larger number of variables and environments involved versus simple web apps. Cloud testing and cross-platform testing tools still don’t solve enough of the problem. And generally, he said, the complexity that developers add to the app will always outpace test development, as the graph below illustrates:

Even though everyone in the room knew that Arbon sells a product that uses AI/machine learning to test, a fact that he disclosed up front, many people in the audience were convinced that he's right. Development has changed considerably over the past few years, but testing strategies, techniques, and technologies haven’t had a major revolution in over a decade, Arbon said.

He sees AI as a necessary revolution for test automation. Bots need to be able to quickly handle the litany of simple new test cases as app complexity grows exponentially. What his team has done so far involves feeding every app in the app store to a neural network so that it can recognize elements like a login field and a hamburger menu on an app that it’s never seen before. Right now their tool can construct functional test cases as long as the app doesn’t have complicated gestures (Snapchat would be an example of an app that has gestures that are too complicated).

Many companies are working on this problem, and the payoff for testers could be enormous. Test step sequences change all the time, and element selection can be time-consuming. Some teams have trouble maintaining just 20 of these tests. If AI could handle these updates in a matter of seconds, we’d all have a lot more stable apps.

Forget UI test automation: Service-level tests rock

Paul Merrill, a testing consultant at Beaufort Fairmont and an organizer of the conference, had a great interactive session that showed beginners in API/service testing how to get started.

Just a few minutes into the session, Merrill found an audience member who pointed out the worst part of UI test automation: flaky tests. Tests that are “green in the morning and red in the afternoon,” the attendee said.

Not only are UI tests brittle, Merrill said, but testers often overuse them because they mistakenly assume that a UI test flow is exactly the same as a human performing those actions. A better strategy, he says, is to use service-level tests wherever possible.

“If you can test it without accessing the UI, do it.”

—Paul Merrill

Do you have the right tools and strategies for testing APIs?

First, Merrill showed the audience how to manually test an API, something you might want to do in an exploratory test session before you decide which tests to automate. APIs usually send data in XML or JSON formats.

The first thing to do, Merrill suggested, is to search for a browser plugin like JSONView if you use Chrome. Tools like JSONView are helpful because they put the message data (in this case JSON) in a human-readable format. From there Merrill used Ctrl+F to manually confirm that specific strings or values were in a message.
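Once a manual Ctrl+F check proves useful, the same idea can be scripted. The sketch below is only an illustration, not Merrill's demo code: it parses a JSON message and collects every value stored under a given key, wherever it is nested. The `find_values` helper and the sample payload are hypothetical.

```python
import json


def find_values(node, key):
    """Recursively collect every value stored under `key` in parsed JSON."""
    found = []
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key:
                found.append(value)
            # Keep descending, since the key may also appear deeper down.
            found.extend(find_values(value, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_values(item, key))
    return found


# Hypothetical message body, e.g. pasted from the browser or saved from a request.
raw = (
    '{"pets": [{"id": 1, "name": "Rex", "status": "available"},'
    ' {"id": 2, "name": "Tom", "status": "sold"}]}'
)
message = json.loads(raw)

statuses = find_values(message, "status")
assert "available" in statuses  # the scripted equivalent of searching by eye
print(statuses)  # prints ['available', 'sold']
```

Unlike a plain text search, this distinguishes a key named `status` from the word "status" appearing inside some other value.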

Although some testers weren’t comfortable in a command line interface, it gives them more power to manually or automatically check API messages or filesystem exchanges, especially with the curl command. There are tools that can help tie these tests into the command line, which is important since the command line’s biggest benefit is connecting all of your tests to a continuous integration (CI) engine like Jenkins.

When you’re ready to automate your API tests, you'll find great open source and commercial tools that can help, including:

- Advanced REST Client

- Spock

- SOAPUI

- Ready API (commercial)

- Postman (the tool Merrill used for his demos)

- REST Assured

Postman and other tools have built-in test cases, so getting started with building automation isn’t very hard, Merrill said. For hooking into Jenkins with Postman, use Newman, which runs Postman at the command line. Here’s a tutorial on how to do that.

The presentation portion of the session ended with an API testing demo in Java (code for the demo is available on Merrill’s GitHub account). He also suggested testers check out Petstore.swagger.io as a teaching tool for APIs, as well as using the Swagger.io platform and setting up a Swagger site (Swagger removes the guesswork in calling a service).
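Merrill's demo was written in Java and is not reproduced here. As a rough illustration of what a service-level check looks like, here is a minimal Python sketch. It assumes the public Petstore demo exposes a `findByStatus` endpoint at the URL shown and returns a JSON array of pets, so verify both against the live Swagger page before relying on them. The function names are hypothetical, and the HTTP call is passed in as a parameter so the check can be exercised without a network connection.

```python
import json
import urllib.request

# Assumed endpoint of the public Petstore demo; confirm it on the Swagger page.
PETSTORE_URL = "https://petstore.swagger.io/v2/pet/findByStatus?status=available"


def http_get(url):
    """Fetch a URL and return (status_code, body_text)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status, response.read().decode("utf-8")


def check_available_pets(get=http_get, url=PETSTORE_URL):
    """Service-level check: no browser and no UI, just request and response."""
    status_code, body = get(url)
    assert status_code == 200, f"expected 200, got {status_code}"
    pets = json.loads(body)
    assert isinstance(pets, list), "expected a JSON array of pets"
    for pet in pets:
        assert pet.get("status") == "available", f"unexpected pet: {pet}"
    return len(pets)


def fake_get(url):
    """Canned response standing in for the real service."""
    return 200, json.dumps([{"id": 1, "name": "Rex", "status": "available"}])


print(check_available_pets(get=fake_get))  # prints 1
```

Calling `check_available_pets()` with no arguments would run the same assertions against the live service.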

How to overcome authentication, test data management and other thorny service test automation issues

Merrill used the last third of his time to ask people what problems they were running into with service test automation. Authentication was a big issue. Some lower test environments may not have the same authentication as the upper ones.

Environments turned out to be a common cause of flaky tests, as more attendees chimed in. Those environments could often cause the timing between the application and the test to be off, causing failures.

Test data management was another key issue. Many tests fail because data changes and you run into race conditions, Merrill says. Service virtualization can help, but since these are commercial services, you need to be smart about turning the service on and off so you don’t overspend. Here’s a demo video of service virtualization in action.

One other issue was communication between testers and developers. Test-driven development (TDD) is a good way to create conversations, Merrill said, since there’s some guesswork in building tests and using mocks before the actual code is written. He suggested checking out mockable.io and Wiremock, adding that many tools are available to address all of your mocking needs.
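To make the idea of testing against a mock concrete, here is a small sketch using Python's standard `unittest.mock` rather than the tools Merrill named. The `user_service` object, its `get_user` method, and the response shape are all hypothetical: they represent the guess a tester makes about an interface that developers have not built yet.

```python
from unittest.mock import Mock


def format_greeting(user_service, user_id):
    """Code under test: depends on a service that may not exist yet."""
    user = user_service.get_user(user_id)
    return f"Hello, {user['name']}!"


# The mock stands in for the unwritten service. Its return value encodes
# the tester's assumption about what the real response will look like.
user_service = Mock()
user_service.get_user.return_value = {"id": 42, "name": "Ada"}

greeting = format_greeting(user_service, 42)

assert greeting == "Hello, Ada!"
# Also verify how the dependency was called, not just what came back.
user_service.get_user.assert_called_once_with(42)
print(greeting)  # prints Hello, Ada!
```

The assumed response shape is exactly the kind of detail worth confirming with developers, which is where the conversation starts.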

One attendee brought up what Merrill called “Defining the interface,” where testers create documented attribute conventions that developers add into the code so test engineers can start testing early. You will need to do a good job of sharing your documentation and getting the information you need from developers during sprint planning if you want this to work, Merrill says.

Merrill recommended several helpful articles. Here are links to just a few:

- What is an API? In English, please.

- What is an API?

- Send the First Request - Postman Blog

- HTTP Methods

- The Forgotten Layer of the Test Automation Pyramid

And for general information about RESTful API design, check out A guide to RESTful API design: 35+ must-reads, by Bill Doerrfeld.

The big question: Which tests should you automate?

This is always one of the biggest questions testers face. You know it’s good to let computers do the heavy lifting by automating your tests. But you can’t automate a test for every potential bug, and excessive automation can get expensive. More automated tests means more that you have to maintain, and they can slow down the development lifecycle.

Also, redundant automation is noisy. You might get 50 test failures even though you didn’t actually break 50 things—these errors get triggered because a lot of your tests share a similar path through the software.

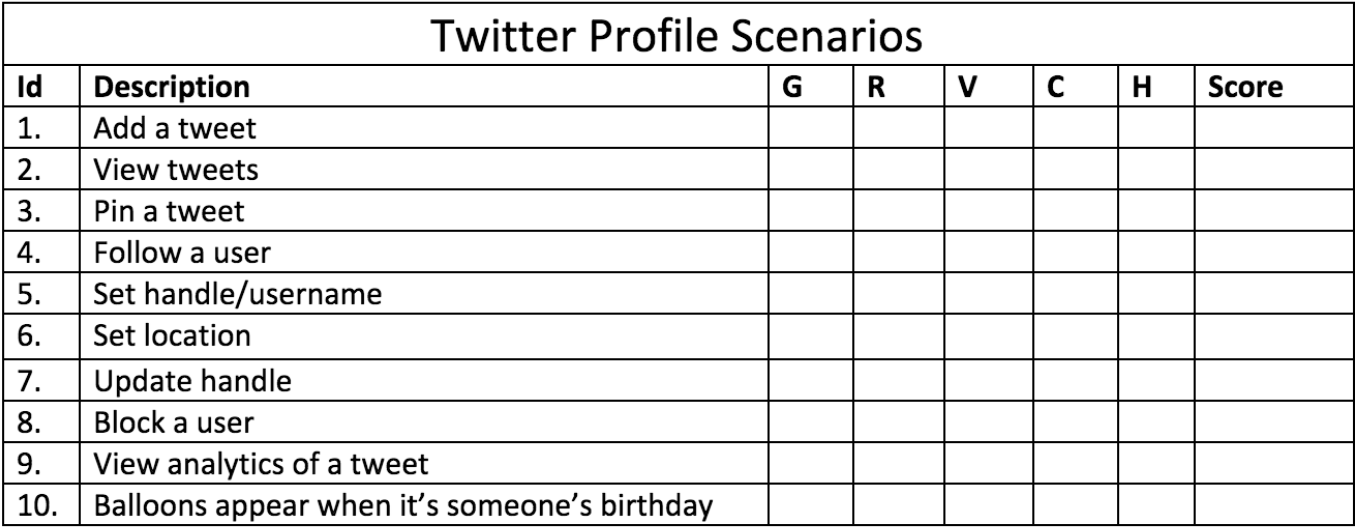

That’s the premise Angie Jones, a senior software engineer in test at Twitter, started with during her presentation. To help testers make decisions about what to automate, Jones shared the model she uses at Twitter. For the rest of the session, attendees used this simple model to decide whether or not certain actions on Twitter should have automated tests.

These are the four metrics she uses to make a decision on automation:

- Risk - How frequently is this feature going to be used by customers? vs. What is the impact for most customers if this is broken?

- Value - Does this test provide new information or is it redundant with a lot of other tests? vs. How quickly would this failure be addressed once it's in the bug tracker? (Testers often don’t realize that things aren’t as distinct as they seem. For example, the tests created for testing a tweet versus a tweet with an emoji wouldn’t be very different.)

- Cost efficiency - How quickly could you script an automated test? vs. How easy would it be to script an automated test? (Note: for this exercise, tests taking less time should have a higher score, not a lower one, as intuition might tell you.)

- History – How high is the volume of failures in this feature’s area of the codebase, historically? vs. How high is the volume of failures for this test, historically?

Testers give a score of one to five for each of the two dimensions of the four metrics above. The two ratings are multiplied, so the score for each metric can range from 1 to 25 (remember, the time measurement for cost efficiency should not have a lower score for shorter times). Then the four metric scores are added up for each test, giving a total between 4 and 100. Jones handed out a worksheet that looked like this:

The “G” column stands for “gut check,” which was very useful because it showed how wrong our intuition can be when it comes to test automation. Here was what each range of scores meant in Jones’ model:

- 75 - 100: Automate

- 26 - 74: Possibly automate

- 4 - 25: Don't automate
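The arithmetic can be sketched in a few lines of Python. This is an illustration rather than Jones' worksheet: it assumes the two ratings for each metric are multiplied, which is what the 1-to-25 range per metric and the 4-to-100 total imply. The function names and the sample ratings are hypothetical.

```python
METRICS = ("risk", "value", "cost_efficiency", "history")


def automation_score(ratings):
    """Sum, over the four metrics, the product of each metric's two 1-5 ratings."""
    total = 0
    for metric in METRICS:
        first, second = ratings[metric]
        if not (1 <= first <= 5 and 1 <= second <= 5):
            raise ValueError(f"{metric} ratings must be between 1 and 5")
        total += first * second  # each metric contributes 1 to 25
    return total  # overall range: 4 to 100


def recommendation(score):
    """Map a total score onto the three ranges listed above."""
    if score >= 75:
        return "Automate"
    if score >= 26:
        return "Possibly automate"
    return "Don't automate"


# Hypothetical ratings for one candidate test.
ratings = {
    "risk": (5, 5),             # used constantly, severe impact if broken
    "value": (4, 5),            # mostly distinct, would be fixed quickly
    "cost_efficiency": (3, 4),  # higher means quicker and easier to script
    "history": (2, 3),          # few past failures in this area
}

score = automation_score(ratings)
print(score, recommendation(score))  # prints 63 Possibly automate
```

Sorting candidate tests by this score in descending order gives the prioritization described next.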

The scores also tell you how you should prioritize the automation of your tests—a higher score means you should automate it sooner. Jones shared another worksheet that you can use to implement this model within your own team. The spreadsheet was adapted from Dorothy Graham: What to Automate Worksheet.

Consumer-driven contract testing: Have you considered PACT?

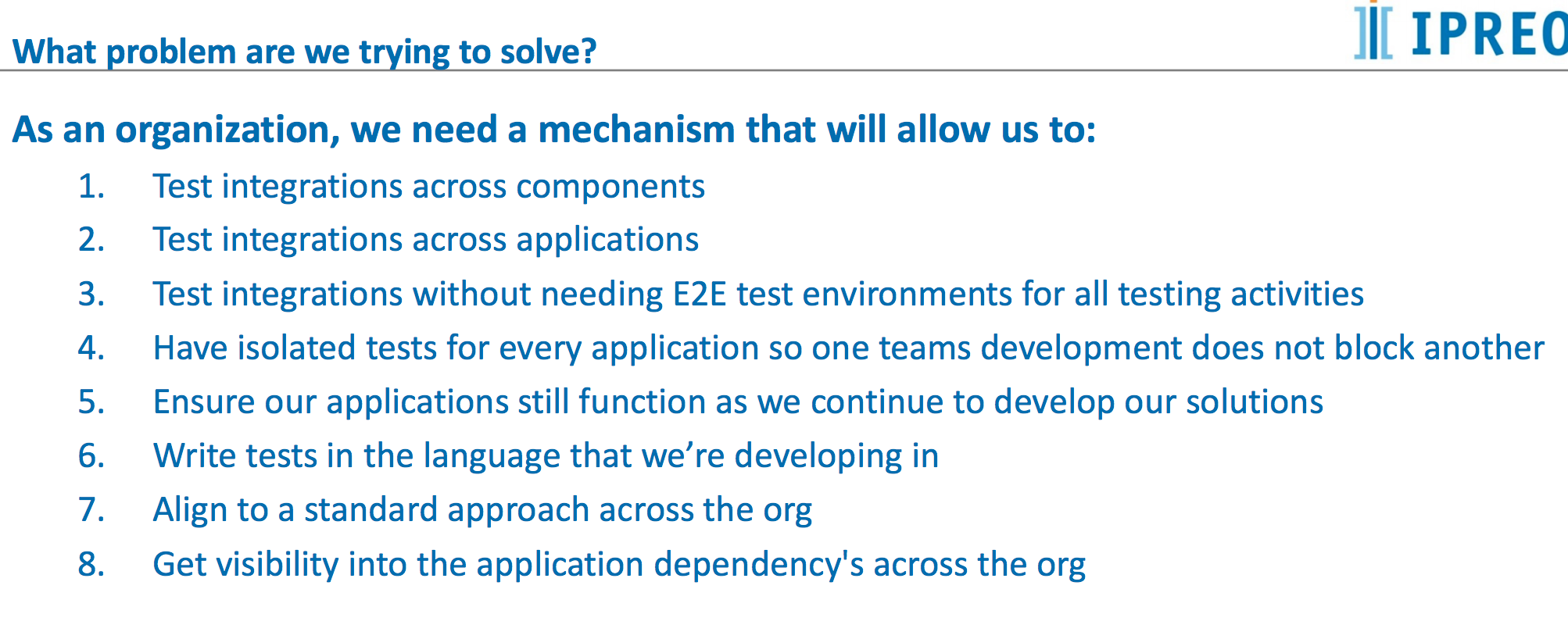

Consumer-driven contract (CDC) testing will become more important as more organizations deal with the unique complexities of microservices, said Ron Kuist, a QA manager at Ipreo. His talk focused on the open source tool PACT as a solution for contract testing, but he mentioned other frameworks as well, such as Spring Cloud Contract.

Kuist described CDC testing as a method for testing pieces of an architecture in much the same way that two parties make a legal contract. The test fails if the consumer (client) or the provider (API) doesn't fulfill its obligation as stated in the test. In a way, the users of your APIs write tests for you when using CDC testing. PACT gathers data that helps you understand the usage of the API so your development can follow where customers lead and you can build tests according to customer usage.
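The core idea can be shown without any framework. The sketch below is plain Python and does not use PACT's API. It assumes a contract is simply the set of fields and types that one consumer relies on, and every name in it is hypothetical.

```python
# The consumer states only what it actually depends on.
CONSUMER_CONTRACT = {"id": int, "name": str, "status": str}


def contract_violations(contract, response):
    """Return a list of ways the provider's response breaks the contract."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, got {actual}"
            )
    return problems


# The provider added a field: harmless, because no consumer asked for it.
ok_response = {"id": 1, "name": "Rex", "status": "available", "tags": []}
print(contract_violations(CONSUMER_CONTRACT, ok_response))  # prints []

# The provider renamed a field: this breaks the consumer, so the check fails.
broken_response = {"id": 1, "title": "Rex", "status": "available"}
print(contract_violations(CONSUMER_CONTRACT, broken_response))
# prints ['missing field: name']
```

Running each consumer's expectations against the provider's build is what lets a provider team learn that a change breaks a client before it ships.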

This slide shows the types of problems CDC testing is equipped to solve:

CDC testing is not meant to replace all of your end-to-end tests or functional tests. These tools are meant to be used on isolated unit tests, he said.

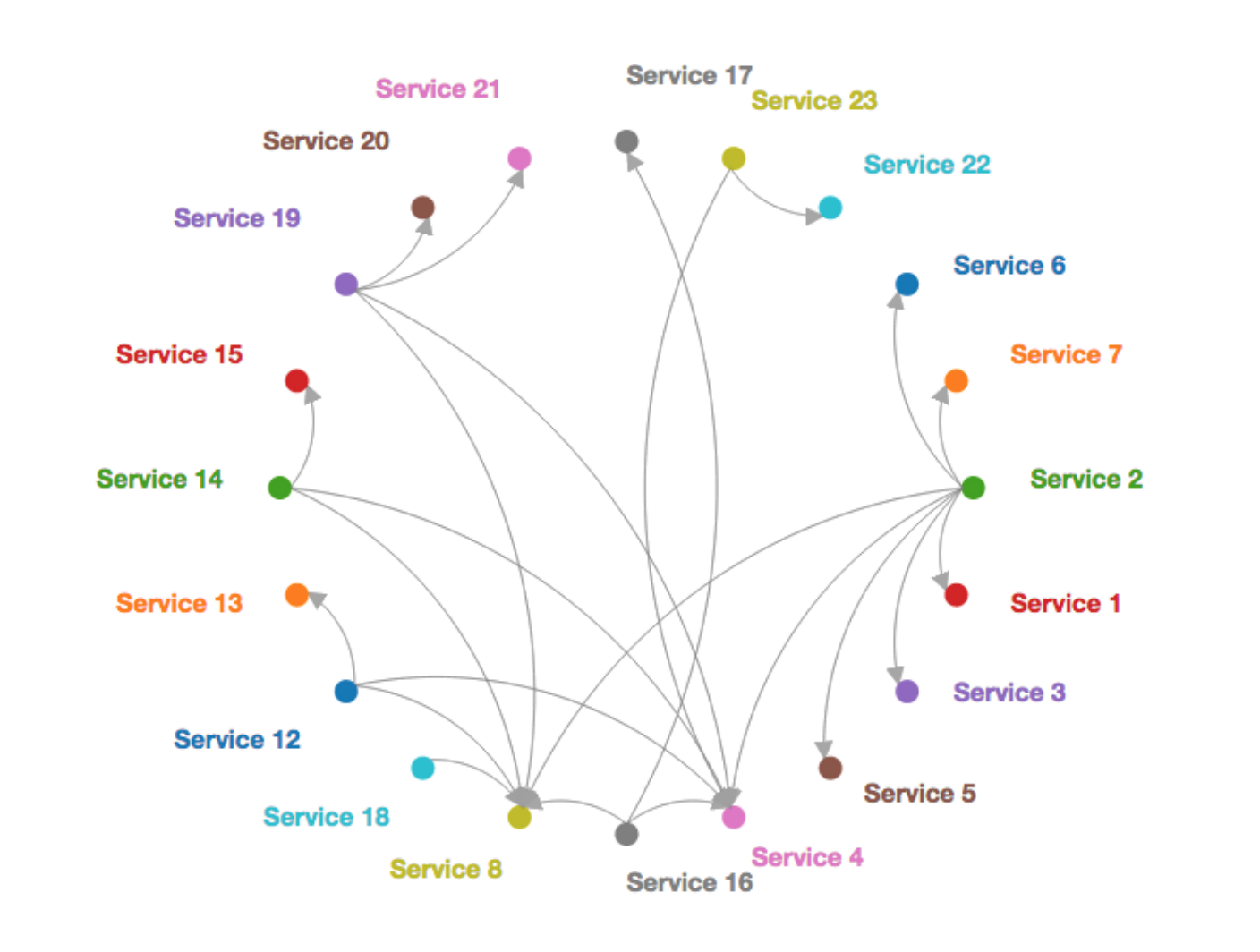

Tools like PACT are good at solving communication problems. Below is a screenshot of a full-organizational dependency graph from PACT broker. This graph can be helpful—especially in a microservices environment, Kuist said:

PACT is helpful in communicating the acceptance testing process to business people as well, much like acceptance test-driven development (ATDD) or behavior-driven development (BDD), but Kuist says it’s not quite the same thing.

The presentation concluded with a demo and the resources below. The first article on this list, from Martin Fowler's blog, is an excellent deep-dive into the definition and details of consumer-driven contracts.

- Consumer-Driven Contracts: A Service Evolution Pattern

- Consumer Driven Contract Testing with Pact

- Sharing Consumer-Driven Contracts with Pact Broker

Get out there and network

Those were just a few of the takeaways from TISQA, and you'll learn even more if you attend conferences like this in person. Whether you're looking for the networking benefits of smaller, regional testing conferences or you want to hit some of the larger ones, check out our list of the top testing conferences in 2018.

Image credit: Flickr
