Agile methods have been around for a decade and a half, but most mid-size and large organizations are only now going through the transformation and adoption process. They’ve heard about DevOps, the Scaled Agile Framework, and other methods, as well as why they need performance engineering, and they’re eager to get started. But in the move to agile, results are paramount: No one will care what methods you use if your technology performs poorly or fails altogether.

Too often, organizations make time for performance testing only as an afterthought. The work that’s needed comes too late in the game to improve results for the customer or end user.

So how soon should performance issues be addressed? You should start in the earliest phase, when features and functions are first being planned and described. Here's how you can incorporate performance engineering into your user stories, so that each sprint can deliver quality that goes far beyond the functional requirements.

Performance at the user-story level

How do your user stories get described? Mike Cohn and others who write about agile development have long advocated using an index card, or maybe a sticky note, to capture user stories. The idea is that if you can’t fit your user story on a 3-by-5 index card, you haven’t yet honed it down to its essentials.

Writing a good “story” in agile development involves things as simple as defining who the actors are and how they will interact to get expected results. But for performance engineering, there are additional considerations.

When teams start breaking down epics into stories, they also need to think about the acceptance test criteria. Performance acceptance criteria are just as important, which is why I believe you should also use the back of the card to capture them. They need to become an essential part of the user story as it is elaborated, estimated, prioritized, and committed to in a backlog.

This is what performance engineers mean when they talk about “building performance in” at a very early stage. As you work with a business person, an analyst, or the end user, the acceptance test criteria you’ve captured show them that you’re implementing the story correctly: It does the things it must do, and it meets (or exceeds) the end user’s performance expectations. This is what’s missing today in the way requirements are understood and managed, and it represents a huge gap in effective performance engineering practices in nearly all organizations.

Let me show you how I try to fix that problem.

3 ‘building performance in’ examples

A user story helps you think about who (in whatever role or capacity) needs to perform which activities in order to get a certain result. Beyond its functional purpose, the user story template also gives you a rudimentary framework for building in performance considerations.

For example, if you’re designing an online game, performance considerations include: “How many people will be entering a new name and optional description concurrently?” “How many people will be inviting others?” “What types of devices will they be using?” and “What are the network conditions, and across which world geographies are the users distributed?”

The three examples that follow illustrate this technique.

Example 1: Vehicle remote start … or not!

On a cold day in Detroit, I visited the CTO of a major auto manufacturer, who was upset with the auto-start feature his company had installed on new cars. “Watch,” he said. With his mobile phone he launched the app and tapped an icon to remote-start his car. “Now follow me,” he said. We proceeded down the hallway, down three stories in the elevator, then about 200 yards to where his car was parked. The trip from his office to his vehicle took exactly 12 minutes. I timed it.

When we got to his car, it wasn’t running. “Okay, just wait…” he said. We stood in the cold for another few minutes, and then the engine turned over, just as requested from his mobile app. The only problem: The button had been pressed 14 to 15 minutes earlier! “I’m afraid this is our customers’ everyday reality,” he said.

By some metrics, this unacceptable delay wasn’t the fault of the software itself. It had actually been well designed, according to the user story that I’ve captured below.

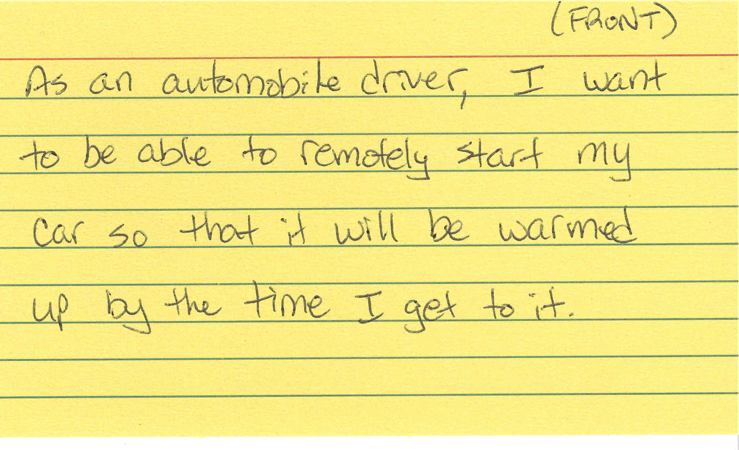

The user story reads: "As an automobile driver, I want to be able to remotely start my car so that it will be warmed up by the time I get to it."

Okay, but this user-story description captures several very subjective ideas. What does “by the time I get to it” mean? Does it mean “user should walk two to three minutes more slowly than usual” to ensure that the vehicle is on when he arrives? Or does it mean “user should press auto-start 15 minutes prior to arrival” to ensure that the vehicle is actually “warmed up” upon user arrival? Of course not. Most users would expect a car to start within five seconds so that it would be warming for at least five or ten minutes before they’re ready to drive.

What if you captured those subjective, human requirements that describe the desired outcome on the back of the same user story card? Here’s what that might look like:

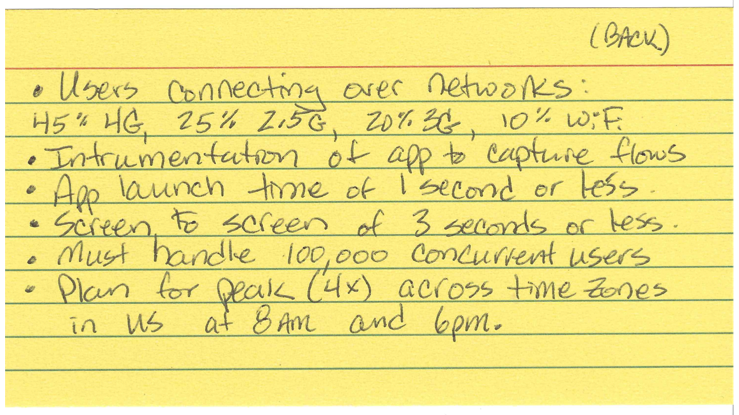

The back of the card reads:

- Users connecting over networks: 45% 4G, 25% 2.5G, 20% 3G, 10% WiFi

- Instrumentation of app to capture flows

- App launch time of 1 second or less

- Must handle 100,000 concurrent users

- Plan for peak (4x) across time zones in US at 8 am and 6 pm
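To make the back of the card usable by a load-testing script, the criteria can be captured as data rather than prose. The Python sketch below is one way to do that, assuming a hypothetical `PerformanceCriteria` class (not part of any agile tool or template). It checks that the network mix adds up to 100% and splits the 100,000 concurrent users across the network types listed on the card.

```python
from dataclasses import dataclass


@dataclass
class PerformanceCriteria:
    """Back-of-card performance acceptance criteria for one user story.

    Hypothetical structure for illustration; field names are assumptions.
    """
    concurrent_users: int
    network_mix_pct: dict[str, int]  # network type -> share of users, in percent
    app_launch_max_s: float

    def __post_init__(self) -> None:
        # The network shares on the card should describe the whole user base.
        total = sum(self.network_mix_pct.values())
        if total != 100:
            raise ValueError(f"network mix sums to {total}%, expected 100%")

    def users_per_network(self) -> dict[str, int]:
        """Split the concurrent-user target across network profiles for a load test."""
        return {
            network: self.concurrent_users * pct // 100
            for network, pct in self.network_mix_pct.items()
        }


# Values copied from the back of the remote-start card.
remote_start = PerformanceCriteria(
    concurrent_users=100_000,
    network_mix_pct={"4G": 45, "2.5G": 25, "3G": 20, "WiFi": 10},
    app_launch_max_s=1.0,
)

print(remote_start.users_per_network())
# {'4G': 45000, '2.5G': 25000, '3G': 20000, 'WiFi': 10000}
```

A load test can then assign each virtual user to a network profile in these proportions instead of running everything over a single fast connection.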

That afternoon, as we hustled back into the warmth of his office, he asked, “So, how do we fix that app?” I started explaining how to capture performance-related acceptance criteria early in the development cycle, and I sketched out something close to what you see above on his whiteboard. Next thing you know, I had a team of six tech leads sitting in front of me.

Performance focus: Get the team to understand the impact on your users

His team has since adopted several new performance engineering practices, and that particular glitch was fixed. More important, user satisfaction improved, a reminder that software continues to be a competitive differentiator for auto manufacturers.

Here are some of those new performance-related practices:

- Use both front and back of a user story card to capture 1) the standard "who," "what," and "so what?" as well as 2) the performance acceptance criteria.

- Retool the organization’s “definition of done” to include both front- and back-of-card details.

- Gather performance results automatically, starting with the initial build, and run performance tests automatically with each deployment into the first environments.

- Establish performance accountability and ownership by all stakeholders of a user story, factored in from the beginning.
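The third practice, automated performance results on every build, often takes the form of a gate in the pipeline. Here is a minimal sketch, assuming launch times have already been collected from an instrumented build; the function names and sample numbers are hypothetical. It compares the 95th percentile (nearest-rank method) against the card’s 1-second launch budget, so with a small sample a single slow outlier is enough to fail the check.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


def performance_gate(samples_s: list[float], budget_s: float, pct: float = 95) -> bool:
    """Pass only if the pct-th percentile of measured times is within the budget."""
    observed = percentile_nearest_rank(samples_s, pct)
    print(f"p{pct:g} = {observed:.2f}s (budget {budget_s:.2f}s)")
    return observed <= budget_s


# Hypothetical launch times (seconds) collected from an instrumented build.
launch_times = [0.62, 0.71, 0.68, 0.90, 0.75, 0.66, 0.81, 0.70, 0.95, 1.40]

# Card criterion: app launch time of 1 second or less.
passed = performance_gate(launch_times, budget_s=1.0)
print("PASS" if passed else "FAIL")
```

Gating on a percentile rather than the average keeps a handful of fast runs from hiding the slow experience some users actually get. In a CI job, a `False` result would translate into a nonzero exit code that stops the deploy.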

The adoption of these capabilities did not require a large purchase of software or hardware, but rather a change in thinking and focus, along with some minor adjustments in the culture of the organization. I believe many organizations can improve software delivery along the same lines, by increasing the team’s focus on the end user and sharing success in feedback and deployment of products and services.

Example 2: Will you be on time for your next airline departure?

Most of us have been there: We know the time of our flight, how long it takes to get to the airport, the transportation method we’ll use, etc. But when we’re on the move, we need to rely on mobile devices to stay updated regarding actual wheels-up time. Weather and other circumstances can cause last-minute delays, and waiting in an airport can be a productivity killer.

But if you could receive reliable, up-to-the-minute flight status on your mobile device, you’d have far more control than you’re allowed inside today’s highly secure, highly regulated airport facilities.

Here’s a simple user story for this need.

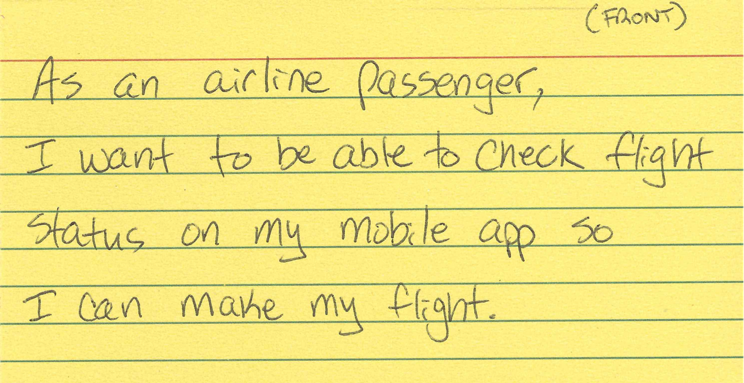

The user story reads: I want to be able to check flight status on my mobile app so I can make my flight.

Sounds reasonable, right? This is what most airlines proclaim as the value of these apps, and when everything is working as scheduled, the apps tend to be worthwhile. But when things go wrong—say, when one flight is delayed and causes further delays for a beehive of interconnecting flights—these apps are frequently inaccurate or altogether wrong. A huge part of the problem is the massive influx of frustrated customers, all hitting the app at the same time to learn the status of their flights.

Airline apps are useless if they can’t handle the changes that travel entails. Without good information, changes can lead to overwhelmed gate agents, increased call volumes, missed connections, and angry passengers questioning their reasons for flying in the first place. If only the app worked as designed, results would be different, and outcomes for end users would be more positive.

Here’s what I suggest for the back of the card for this story.

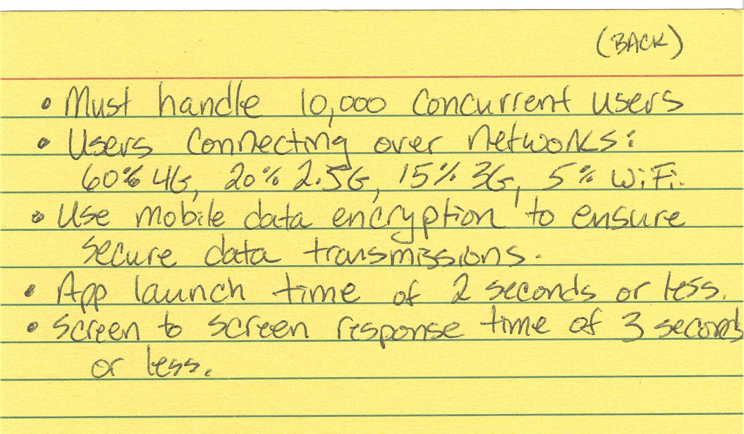

The back of the card reads:

- Must handle 10,000 concurrent users

- Users connecting over networks: 60% 4G, 20% 2.5G, 15% 3G, 5% WiFi

- Use mobile data encryption to ensure secure data transmissions

- App launch time of 2 seconds or less

- Screen to screen response time of 3 seconds or less

By considering performance factors as this story is being created, all parties can consider the experience of users whose flights have been delayed for whatever reason and who now need to quickly make new plans. I won’t address all of the performance criteria on the back of this card. But I’ll zero in on one of them: “Must handle 10,000 concurrent users.”

Performance focus: Use cloud load testing to understand sudden bursts in usage

Getting to the level of 10,000 concurrent users is a challenge for many organizations. If your typical performance scenario uses 1,000 virtual users, you should increase the transactions per second (TPS) to the rate that 10,000 actual users would generate, to see how the system responds.
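One standard way to size that increase is Little’s Law for a closed workload, N = X × (R + Z), where N is the number of concurrent users, X is throughput in transactions per second, R is response time, and Z is the think time between a user’s actions. The sketch below assumes one transaction per user action, a 3-second response time (the card’s screen-to-screen budget), and a hypothetical 27-second think time; substitute values measured from your own app.

```python
def required_tps(concurrent_users: int, response_time_s: float, think_time_s: float) -> float:
    """Little's Law for a closed workload, N = X * (R + Z), solved for X (TPS)."""
    return concurrent_users / (response_time_s + think_time_s)


# Assumed: 3 s response time (card budget) and a hypothetical 27 s think time.
R, Z = 3.0, 27.0
for users in (1_000, 10_000):
    print(f"{users:>6} users -> {required_tps(users, R, Z):.1f} TPS")
```

Under those assumptions, 1,000 users generate about 33 TPS and 10,000 users about 333 TPS. If response time rises under load, the same 10,000 users generate fewer TPS, which is exactly the kind of behavior the test should expose.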

I’m going to quote myself from an article I published in May, “Why you need cloud load testing, and how to find the right tools”:

Compared to traditional load testing, cloud load testing is incredibly simple. For the most part, running a cloud load test involves setting a few parameters or writing a simple script and then clicking the run button. Because the resources required to run these tests are all based in the cloud, there are no complex hardware stacks to manage or racks of servers to spin up. The hard work is all handled behind the scenes, over the web. Cloud load tests run quickly and can give you insight into the performance of your web-based application based on thousands of virtual users.

Cloud load testing—and the ability to quickly perform burst tests—offers an easy, inexpensive way to test performance under various load conditions and can help teams developing apps such as the airline flight-status app better serve their customers. To try this yourself, go to the article, where you will see links to trials of the three most common tools.
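A burst test has two parts: a load profile that jumps from normal traffic to the spike, and a runner that fires requests and records latency. The sketch below shows both in plain Python, assuming a hypothetical `fake_flight_status_request` stand-in for the real API call. A single machine with a thread pool can only simulate a scaled-down burst; cloud load-testing tools distribute the same idea across many load generators to reach thousands of virtual users.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def burst_profile(baseline: int, peak: int, ramp_steps: int, hold_steps: int) -> list[int]:
    """Target concurrency per step: baseline, linear ramp to peak, hold at peak, back to baseline."""
    ramp = [baseline + (peak - baseline) * step // ramp_steps for step in range(1, ramp_steps + 1)]
    return [baseline] + ramp + [peak] * hold_steps + [baseline]


def run_step(concurrency: int, request_fn: Callable[[], None], max_workers: int = 200) -> list[float]:
    """Submit `concurrency` calls through a thread pool and return each call's latency in seconds."""

    def timed_call() -> float:
        start = time.perf_counter()
        request_fn()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=min(concurrency, max_workers)) as pool:
        futures = [pool.submit(timed_call) for _ in range(concurrency)]
        return [f.result() for f in futures]


def fake_flight_status_request() -> None:
    # Hypothetical stand-in for an HTTP call to a flight-status API.
    time.sleep(0.01)


# Scaled-down burst: 10 -> 100 concurrent calls, mirroring a 1,000 -> 10,000 user spike.
for step, users in enumerate(burst_profile(10, 100, ramp_steps=3, hold_steps=2)):
    latencies = run_step(users, fake_flight_status_request)
    print(f"step {step}: {users} calls, max latency {max(latencies):.3f}s")
```

Against a real service, the interesting output is how the latency at the peak steps compares with the baseline steps on either side.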

Example 3: Online banking info for deployed soldiers

My cousin 1st Lt. Aaron DeCapua is currently deployed with the US Army at Camp Buehring in Kuwait. This week, I had a chance to connect with him via Facebook Messenger, using both instant messaging and calls, while he was on a Wi-Fi network at his base. Among the things we talked about was the difficulty soldiers have doing mobile banking in the field. “What has been your experience?” I asked. “What should developers and business folks take into consideration when designing apps for people in your situation?”

“Money is an international commodity,” he told me. “If two banks are competing, the app that can connect with a representative via cellular voice, data voice, or text, and have solid encryption—that app is the best, especially if it has VPN built in. But I have yet to see all those options in a banking app.” Then he told me he often had trouble simply seeing his checking account balance, so he had no idea whether his family had the money to pay the monthly bills on time.

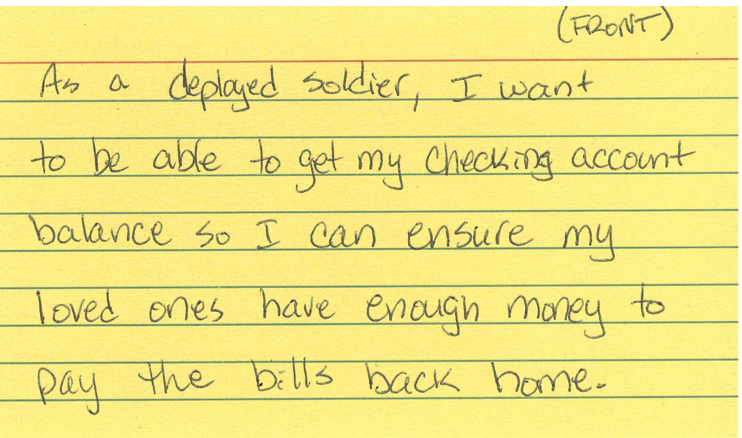

The front of the card for this user story probably looked something like this.

This user story reads: As a deployed soldier, I want to be able to get my checking account balance so I can ensure my loved ones have enough money to pay the bills back home.

At one point in our discussion, my cousin texted me: “No network = No communications = Cannot survive.” Well said, and we can see how important the network is. So, given everything I learned in our conversation, here are the kinds of performance requirements that should be taken into consideration on the back of the card.

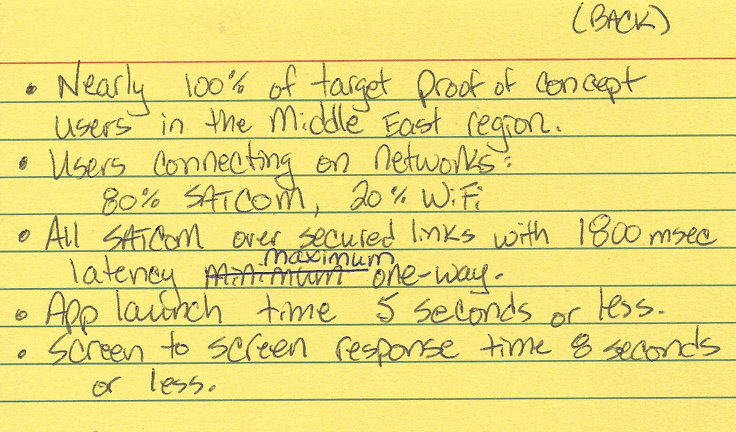

The back of the card reads:

- Nearly 100% of target proof of concept users in the Middle East region

- Users connecting on networks: 80% SATCOM, 20% WiFi

- All SATCOM over secured links with 1800 msec latency maximum one-way

- App launch time 5 seconds or less

- Screen to screen response time 8 seconds or less
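Those numbers interact in a way that is easy to miss. At 1,800 ms one way, every request/response round trip costs up to 3.6 seconds, so the launch and screen-to-screen budgets leave room for very few sequential calls. The arithmetic below is a simplified sketch: It ignores bandwidth and, unless you pass one in, server processing time, and it treats each call as exactly one round trip.

```python
import math

ONE_WAY_LATENCY_S = 1.8        # card: 1800 msec maximum one-way over SATCOM
RTT_S = 2 * ONE_WAY_LATENCY_S  # a request/response pays the link in both directions


def max_sequential_round_trips(budget_s: float, server_time_s: float = 0.0) -> int:
    """How many back-to-back round trips fit in a response-time budget."""
    return math.floor((budget_s - server_time_s) / RTT_S)


# Budgets from the back of the card.
for name, budget_s in [("App launch", 5.0), ("Screen to screen", 8.0)]:
    trips = max_sequential_round_trips(budget_s)
    print(f"{name}: {budget_s:.0f} s budget allows {trips} sequential round trip(s)")
```

That leaves one round trip for launch and two per screen. Connection setup such as TCP and TLS handshakes adds round trips of its own, so a screen on this link likely needs a single batched request over a connection that is already open.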

There are always going to be glitches and exceptions, and perhaps the US Army is already working to ensure that soldiers get the performance they need. If not, I have a few suggestions.

Performance focus: Use network virtualization to understand various conditions

Network virtualization technology originally developed by Shunra for the Israeli military lets organizations optimize their apps to perform well in all network conditions. Development teams can see how their website performs under five different network conditions. The system provides automated optimization recommendations, so teams can understand what to fix at the code level to speed up performance by at least 40 percent.

For software development organizations, a range of network virtualization tools and services is available. Hewlett Packard Enterprise, which acquired Shunra two years ago, has offered a trial of this particular service for free, so this is an easy way for you to begin comparing technologies and learning what network virtualization can add to your own performance engineering. This HPE service will provide you with a 20-plus-page results report, emailed to you in less than five minutes.
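If you want to experiment before evaluating a commercial service, Linux includes an open-source network emulator, the netem queueing discipline, controlled through the `tc` command. This is a different tool from the Shunra/HPE service described above. The Python sketch below only builds the command strings; running them requires root, and the device name `eth0` plus the jitter and loss values in the second example are assumptions, not figures from the card.

```python
import shlex


def netem_command(device: str, delay_ms: int, jitter_ms: int = 0, loss_pct: float = 0.0) -> str:
    """Build a `tc` command that adds netem delay (and optional jitter/loss) to a device's egress."""
    # `replace` installs the qdisc or updates an existing one, so reruns don't fail.
    args = ["tc", "qdisc", "replace", "dev", device, "root", "netem", "delay", f"{delay_ms}ms"]
    if jitter_ms:
        args.append(f"{jitter_ms}ms")  # delay varies by +/- jitter
    if loss_pct:
        args += ["loss", f"{loss_pct:g}%"]  # random packet loss
    return shlex.join(args)


# SATCOM link from the card: 1800 msec maximum one-way latency.
print(netem_command("eth0", 1800))
# Hypothetical degraded SATCOM profile with assumed jitter and loss values.
print(netem_command("eth0", 1800, jitter_ms=200, loss_pct=1.0))
```

netem delays packets leaving the interface, so 1,800 ms on one host models the one-way limit in that direction only. To reproduce the full SATCOM round trip, shape both ends or, as a rough approximation, apply the full round-trip delay on a single side. `tc qdisc del dev eth0 root` removes the emulation afterward.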

Performance as a way of life

Is it possible for performance to be built into the culture of the organization and considered a way of life for all individuals?

I think the specificity of the user story in agile methods has helped development teams stay on track as they complete sprints and work toward product delivery. I’ve seen this work. Before you know it, your extended team members and stakeholders start thinking like performance engineers, working to establish effective performance engineering on their team and across their entire business.

To learn more about effective performance engineering, check out the book recently published by O’Reilly at http://www.effectiveperformanceengineering.com now. For a limited time, you can get the book as a free PDF download, sponsored by HPE. For more information about my cousin 1st Lt. Aaron DeCapua and his experiences with high tech communications in Kuwait, check out my recent blog post which contains the full interview on which I based parts of this article.

Image credit: Flickr
