Many organizations try to increase business agility by splitting apart existing software systems into smaller chunks, believing that this enables safer, more rapid changes. But when moving from a monolithic software system to more loosely coupled services, you must consider how the new architecture will affect the teams involved in building your software.

Without taking into account the team angle, you risk splitting the monolith in the wrong places, or even creating a complex, coupled mess of unmaintainable code, known as a “distributed monolith.”

When I help organizations decouple their large systems into smaller segments, I take an approach to splitting up monoliths that starts with the teams, rather than the technology. Here are some of the common patterns and techniques I have adopted that I hope you’ll find useful. But first let’s step back a bit.

What is a monolith?

The word monolith literally means “single stone” in Greek—a big slab of stuff that’s heavy and difficult to work with. In the software world, there are many different kinds of monoliths, and each requires a different approach to break it apart. Here are six of the more common types:

- Application monolith: A single large application, with many dependencies and responsibilities, that possibly exposes many services or different user journeys.

- Joined at the database: Several applications or services, all coupled to the same database schema, making them difficult to change.

- Monolithic builds: One gigantic continuous integration (CI) build that’s done just to get a new version of any component.

- Monolithic releases: Smaller components bundled together into a “release.”

- Monolithic model: An attempt to keep language and model (representation) consistent across many different contexts, on the premise that “everyone can work on anything,” which leads to inconsistent or leaky domain models.

- Monolithic thinking: One-size-fits-all thinking for teams that leads to unnecessary restrictions on technology and implementation approaches between teams.

This is not an exhaustive list; you may have other kinds of monoliths (or too-tight coupling). So before you start splitting your monolith, identify which kind you’re dealing with, then invest time in good decoupling.

Some organizations have taken the time and effort to split up an application monolith into microservices, only to produce a monolithic release further down the deployment pipeline, wasting an opportunity to move faster and more safely. To avoid creating downstream monoliths, always be on the lookout for the different kinds of monolithic software listed above.

Match your organizational architecture with your software architecture

Several studies have confirmed the core message of Conway’s Law that “Any organization that designs a system ... will produce a design whose structure is a copy of the organization's communication structure.” There are many subtleties to this in practice, but it boils down to this: If the intercommunication between teams does not reflect the actual or intended communication between software components, the software will be difficult to build and operate.
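To make that mismatch concrete, here is a minimal Python sketch that checks whether every dependency crossing a team boundary has a matching communication path between the two teams. Everything in it is hypothetical: the component names, the team names, and the idea that ownership and team links are available as simple lists. Treat it as a way to think about the problem, not as a tool.

```python
def missing_team_links(dependencies, owners, team_links):
    """Return cross-team dependencies whose teams have no communication link.

    dependencies: iterable of (source_component, target_component) pairs
    owners:       dict mapping component name -> owning team
    team_links:   iterable of (team, team) pairs that communicate regularly
    """
    # Communication is treated as symmetric, so store unordered pairs.
    links = {frozenset(pair) for pair in team_links}
    missing = set()
    for source, target in dependencies:
        team_a, team_b = owners[source], owners[target]
        if team_a != team_b and frozenset((team_a, team_b)) not in links:
            missing.add((source, target, team_a, team_b))
    return sorted(missing)


# Hypothetical ownership, dependencies, and team communication paths.
owners = {
    "checkout": "storefront",
    "payments": "billing",
    "invoices": "billing",
    "search": "discovery",
}
dependencies = [
    ("checkout", "payments"),
    ("invoices", "payments"),
    ("checkout", "search"),
]
team_links = [("storefront", "billing")]

gaps = missing_team_links(dependencies, owners, team_links)
for source, target, team_a, team_b in gaps:
    print(f"{source} -> {target}: no link between {team_a} and {team_b}")
```

Each reported gap is a place where either the teams need a communication path or the dependency itself deserves a second look.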

You can use “Reverse Conway” (changing the team structure to match the required system architecture) together with techniques such as domain-driven design (DDD) and code forensics to reshape team responsibilities so that they align with the software architecture you need to produce. Doing so clarifies boundaries and improves the development and operation of your systems. But how do you know where to split the monolith safely?

Use code forensics to help identify hidden coupling

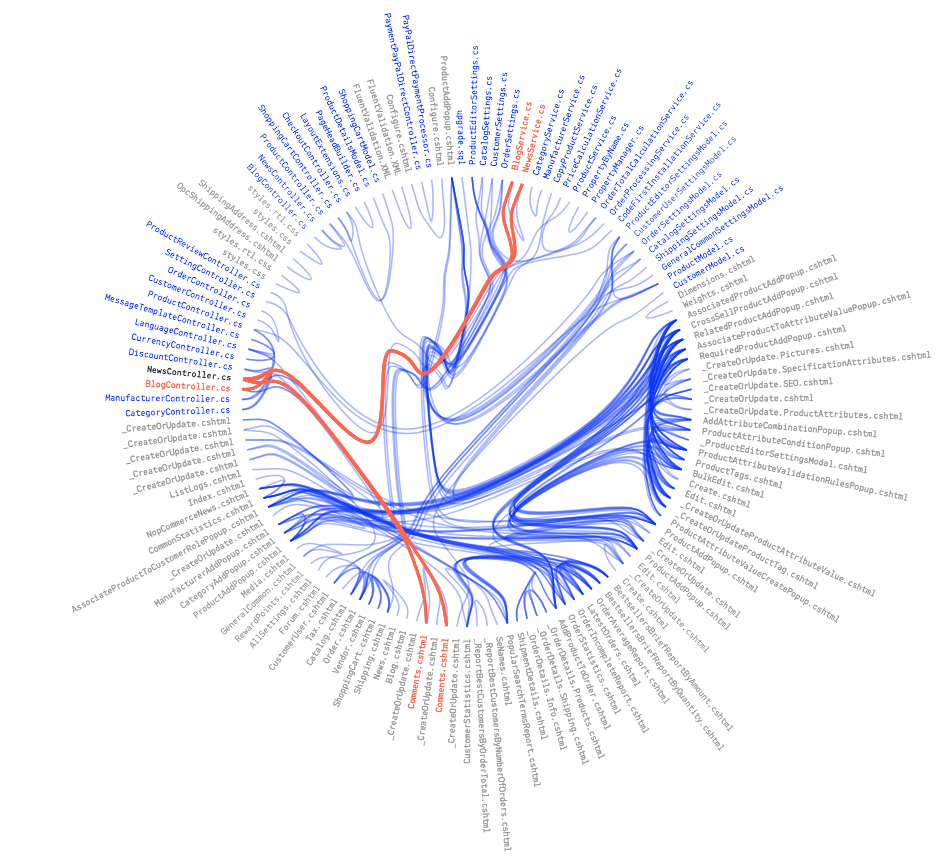

By splitting up your monolithic code into smaller services, you may be able to make responsibility boundaries clearer, although that doesn’t happen automatically. You can use tools such as Codescene and Code Maat to analyze your codebase and detect not only standard code metrics, such as cyclomatic complexity and static coupling, but also temporal coupling—places in your codebase where apparently unrelated files tend to change at the same time, as shown in the repository history.

Temporal coupling analysis by Codescene (with permission from Adam Tornhill)

In his book Your Code as a Crime Scene, Codescene and Code Maat creator Adam Tornhill explained how to use police forensics techniques to analyze and understand the evolution of codebases. For example, he said that "information-poor abstract names are magnets for extra [unwanted] responsibilities." How you name things really does matter, since badly chosen names tend to accrete extra code, making your software harder to work with. Tornhill’s new book, Software Design X-Rays: Fix Technical Debt with Behavioral Code Analysis, takes these ideas even further. I highly recommend both titles.
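To get a feel for what temporal coupling analysis measures, here is a minimal Python sketch. It is not how Codescene or Code Maat is implemented. It assumes you have already extracted, for each commit, the set of files it touched (for example from `git log --name-only`), and it uses a simplified coupling degree: shared commits divided by the two files’ average number of revisions. The file names and the `min_shared` threshold are made up for illustration.

```python
from collections import Counter
from itertools import combinations


def temporal_coupling(commits, min_shared=2):
    """Return (file_a, file_b, shared_commits, degree) tuples, strongest first.

    commits: iterable of collections of file paths, one collection per commit.
    degree:  shared commits divided by the average revision count of the
             two files (a simplified, illustrative measure).
    """
    revisions = Counter()  # how often each file changed
    shared = Counter()     # how often each pair changed in the same commit
    for files in commits:
        unique = sorted(set(files))
        revisions.update(unique)
        shared.update(combinations(unique, 2))

    results = []
    for (a, b), count in shared.items():
        if count < min_shared:
            continue  # ignore pairs that rarely change together
        average_revisions = (revisions[a] + revisions[b]) / 2
        results.append((a, b, count, count / average_revisions))
    return sorted(results, key=lambda r: (-r[3], r[0], r[1]))


# Hypothetical commit history: each set is the files changed by one commit.
history = [
    {"billing/invoice.py", "reports/pdf.py"},
    {"billing/invoice.py", "reports/pdf.py", "README.md"},
    {"billing/invoice.py"},
    {"reports/pdf.py", "billing/invoice.py"},
    {"auth/login.py"},
]

coupled = temporal_coupling(history)
for a, b, count, degree in coupled:
    print(f"{a} <-> {b}: {count} shared commits, degree {degree:.2f}")
```

In this made-up history, the billing and reporting files sit in different packages yet usually change together, which is the kind of hidden coupling worth investigating before you draw a service boundary between them.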

Cognitive load for teams determines the size of subsystems

For safe monolith splitting, it is crucial to consider the cognitive load on each team that works with your software. Cognitive load, as defined by psychologist John Sweller, is “the total amount of mental effort being used in the working memory.” So cognitive load is important in activities that require mental agility—such as software development.

The maximum effective size for a software team is about nine people, and the maximum cognitive load for any given team is the combined capacity of all team members. One team’s maximum cognitive load will differ from another’s. For example, a team of experienced engineers can handle a higher cognitive load than a team of less experienced people. But there is still a maximum effective size for every subsystem, and that is smaller than many software monoliths.

That means you should limit the size of each subsystem so that it does not exceed the maximum cognitive load of the team that’s building it. That’s right: The size and shape of your software should be determined by the maximum cognitive load of your teams. By starting with the needs of each team, you can infer a software and systems architecture that best suits your team members.

A recipe for splitting monolithic software

Now that you have taken Conway’s Law into account, used Code Maat or a similar tool to analyze your codebase for temporal coupling, and limited the maximum size of each subsystem to match each team’s cognitive load, you’re ready to begin splitting your own monolith. But first let’s validate a few assumptions.

Are you certain that your existing monolith works as expected? Are the internal responsibilities between packages/namespaces/modules neat and well-defined? What about subtle bugs that might become serious when you move from in-process calls to cross-machine HTTP calls?

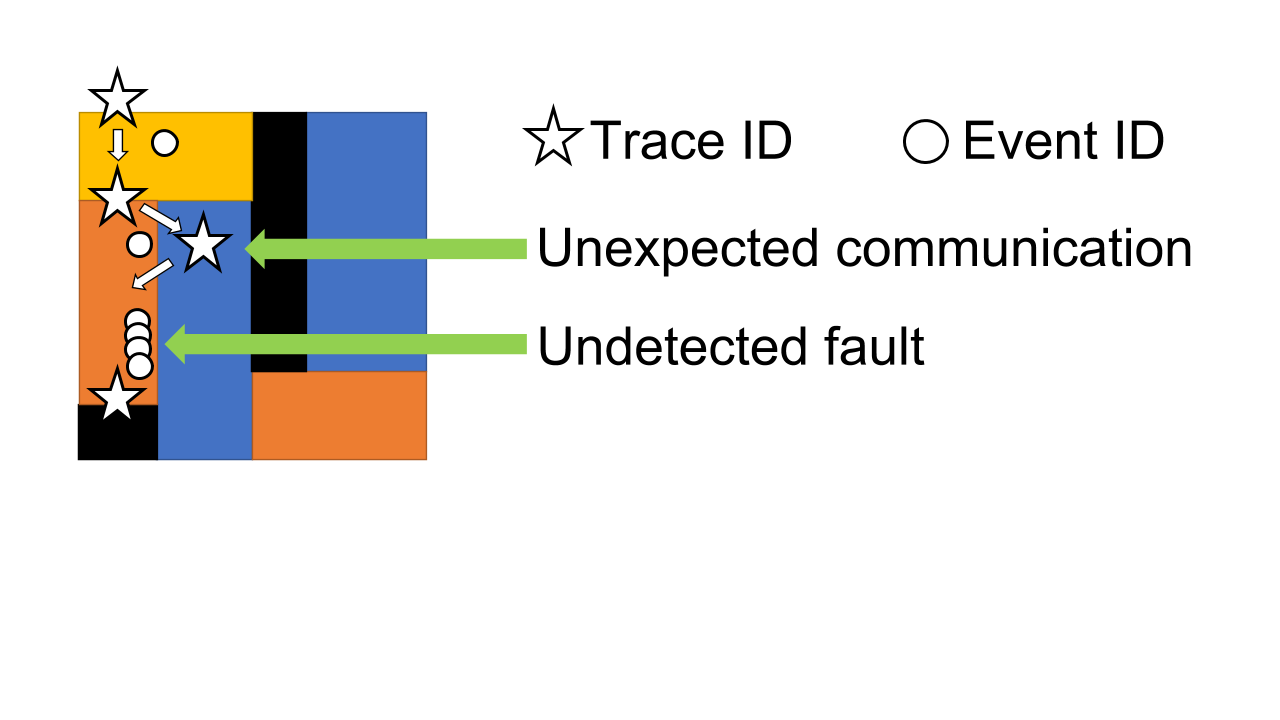

To answer these questions, you need to instrument the code using modern logging, tracing, and metrics techniques to produce rich data about exactly how the software works at runtime. Specifically, you can use event ID techniques in your logging to detect unexpected actions and states reached in the code, along with call tracing. You can use tools such as OpenTracing or Zipkin, and/or application performance monitoring (APM) tools to detect the exact code path used during a request or execution path.
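As an illustration of the event ID idea, this sketch uses Python’s standard `logging` module to attach a stable numeric ID to every log entry, so that unexpected states can be found by ID rather than by searching message text. The event names, numeric IDs, logger name, and the `take_payment` function are all hypothetical, and the in-memory handler exists only so the example can inspect what was logged.

```python
import logging
from enum import IntEnum


class EventId(IntEnum):
    """Hypothetical event IDs; each distinct action or state gets its own."""
    PAYMENT_STARTED = 1001
    PAYMENT_COMPLETED = 1002
    PAYMENT_UNEXPECTED_STATE = 1901


class ListHandler(logging.Handler):
    """Collects records in memory so the example can inspect them."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


logger = logging.getLogger("monolith.payments")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = ListHandler()
handler.setFormatter(
    logging.Formatter("%(levelname)s event_id=%(event_id)d %(message)s")
)
logger.addHandler(handler)


def log_event(event_id, message, level=logging.INFO):
    # `extra` adds event_id as an attribute on the log record.
    logger.log(level, message, extra={"event_id": int(event_id)})


def take_payment(order_state):
    log_event(EventId.PAYMENT_STARTED, "taking payment")
    if order_state != "confirmed":
        log_event(
            EventId.PAYMENT_UNEXPECTED_STATE,
            f"order in state {order_state!r}",
            logging.WARNING,
        )
        return False
    log_event(EventId.PAYMENT_COMPLETED, "payment taken")
    return True


take_payment("confirmed")
take_payment("cancelled")

for record in handler.records:
    print(handler.format(record))

# Unexpected states are now a simple filter on the event ID.
unexpected = [
    r for r in handler.records
    if r.event_id == EventId.PAYMENT_UNEXPECTED_STATE
]
```

In a real system the records would go to your log aggregation tool, where you could count or alert on specific event IDs.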

These techniques will probably highlight areas where your subsystems are communicating unexpectedly, or they could find undetected fault conditions. Fix those problems before splitting your code. Otherwise, the problems of these extra calls and errors will be exacerbated when you move to a distributed microservices model.
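One way to spot unexpected communication is to compare the calls seen in trace data with the calls you believe are allowed. The sketch below assumes you have already exported caller/callee pairs from your tracing or APM tool; it does not use any particular tool’s API. The module names and the `ALLOWED_CALLS` map are hypothetical.

```python
from collections import Counter

# Hypothetical map of which module is expected to call which.
ALLOWED_CALLS = {
    "web": {"orders", "payments"},
    "orders": {"payments", "inventory"},
    "payments": set(),
    "inventory": set(),
}


def unexpected_calls(observed, allowed):
    """Count observed (caller, callee) pairs that the allowed map does not list."""
    counts = Counter()
    for caller, callee in observed:
        if caller != callee and callee not in allowed.get(caller, set()):
            counts[(caller, callee)] += 1
    return counts


# Hypothetical caller/callee pairs extracted from trace data.
observed = [
    ("web", "orders"),
    ("orders", "payments"),
    ("payments", "inventory"),
    ("payments", "inventory"),
    ("inventory", "orders"),
]

surprises = unexpected_calls(observed, ALLOWED_CALLS)
for (caller, callee), count in surprises.most_common():
    print(f"unexpected: {caller} -> {callee} ({count} calls)")
```

Each surprising pair is either a bug to fix or a dependency you had not documented; both are cheaper to resolve while the call is still in-process.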

Detect unexpected communications and faults in a monolith using logging and tracing.

Once you’ve fixed any unwanted calls and errors, you can begin to align a slice of the monolith to what is referred to in DDD as a “business domain bounded context”: a segment of the functionality within which the terminology is consistent and that has a single responsibility, such as taking payments or rendering a document. Where possible, split by DDD bounded context.

But sometimes you’ll need an alternative split line (what I call a “fracture plane”), such as splitting by technology or risk. For example, to meet regulatory compliance (such as PCI-DSS), you may need to split along a data boundary. Similarly, to help achieve performance isolation—for a high-volume ticket-booking system, for example—you may need to split out technical aspects of the booking flow.

You can then split off the new team-aligned subsystems or services piece by piece, each time using the rich log and metrics data to validate your assumptions about how the software works before and after you split out the code. You should:

- Instrument the monolith using logging, tracing, and metrics.

- Understand the data flows and fault responses and fix any problems.

- Align teams to available segments based on suitable fracture planes.

- Split off segments one by one, using logging and metrics to validate changes.
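The last step, validating each split with metrics, could look something like the following sketch. It compares a snapshot taken before the split with one taken after and reports anything that got noticeably worse. The choice of metrics, the field names, the thresholds, and the sample numbers are all assumptions for illustration; use whatever your own monitoring gives you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical summary of one measurement window for a segment."""
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self):
        return self.errors / self.requests if self.requests else 0.0


def regressions(before, after, max_error_rate_increase=0.001,
                max_latency_ratio=1.2):
    """Return human-readable problems found when comparing two snapshots.

    The default thresholds are arbitrary examples, not recommendations.
    """
    problems = []
    if after.error_rate - before.error_rate > max_error_rate_increase:
        problems.append(
            f"error rate rose from {before.error_rate:.4f} "
            f"to {after.error_rate:.4f}"
        )
    if after.p95_latency_ms > before.p95_latency_ms * max_latency_ratio:
        problems.append(
            f"p95 latency rose from {before.p95_latency_ms:.0f} ms "
            f"to {after.p95_latency_ms:.0f} ms"
        )
    return problems


# Made-up numbers: the same segment before and after being split out.
before = Snapshot(requests=10_000, errors=20, p95_latency_ms=180.0)
after = Snapshot(requests=10_000, errors=25, p95_latency_ms=260.0)

problems = regressions(before, after)
for problem in problems:
    print("regression:", problem)
```

Running a check like this after each segment is split off gives you an early signal that moving from in-process calls to network calls has changed behavior more than you expected.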

After you’ve split a segment from the monolith, ensure that the new segment has independence in every area, including a separate version control repository, its own build and deployment pipeline, and probably either separate servers (if you’re using virtual machines) or pods (when using Kubernetes). The new segment is then independent of the monolith and of other segments, enabling the team responsible for each segment to work independently.

Make your move: How to get started

Moving from monolithic software to smaller, decoupled services helps you release more rapidly and safely. But to avoid creating a complex, distributed mess, first consider how teams will build and run the new services. Conway’s Law warns that communications between teams will drive the new, decoupled architecture, and no new service should be larger than the cognitive load its team can handle.

First, identify what kind of monolith you’re dealing with. Then, before you split the code, use a code forensics tool such as Code Maat to identify temporal coupling. Use modern logging, tracing, and metrics tools that can identify unexpected calls and faults.

Only then can you identify suitable fracture planes within the code that can act as sensible split boundaries. Finally, split off segments one by one, validating system behavior with logging and metrics at each stage.

For more on monoliths and teams, watch my presentation from the Velocity Conference EU 2016. Thanks to Adam Tornhill for his input on code forensics, Daniel Bryant for early feedback on this material, and Chris O’Dell for additional insights.
