Developers and technology leaders share a growing misconception that containers can somehow automagically create and turbocharge a continuous delivery pipeline. The contention seems to be that containers enable new features to be rolled out and integrated quickly, providing a predictable and reusable environment, supporting fast feedback, and therefore enabling continuous delivery.

As with many things container-related, the assumptions are not entirely accurate. Containers do not magically speed up an existing continuous delivery pipeline, but they can help if used appropriately. Because continuous delivery is the goal, I will first describe the essential elements of continuous delivery, then describe four common misconceptions about containers as a way to get there.

What is continuous delivery?

British software developer Martin Fowler describes continuous delivery as a software development approach whereby software is built so that it can be released to production at any time. To paraphrase his description, continuous delivery happens when your software is deployable at any time, when you can easily push any version of your software on demand to any environment, and when you or anybody else in the development team can get immediate, automated feedback on the impact of changes to the software from a production readiness standpoint.

How continuous delivery works

Continuous delivery, as Fowler notes, involves continuous integration. That means having a pipeline for integrating the work done by your software development team members on a daily basis. It means being able to verify each newly integrated piece of code for errors as soon as possible by running automated tests on it.

Continuous delivery, says Fowler, involves pushing executables into environments that are increasingly production-like, so you can make sure you are not breaking anything every time you integrate new software.

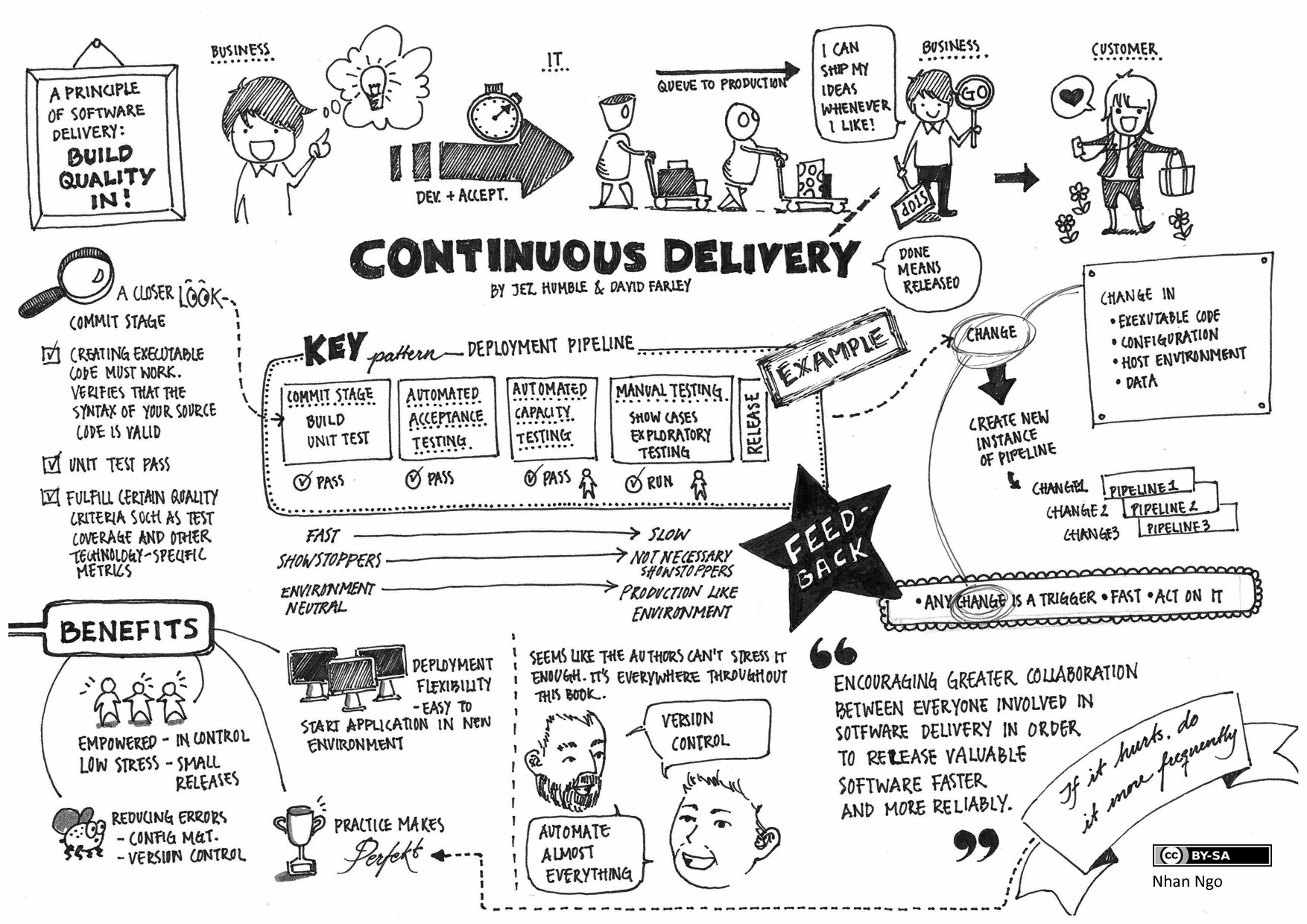

The drawing below, from Jez Humble and David Farley, illustrates how continuous delivery works and interacts throughout a complex bigger picture.
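The idea of pushing one build through increasingly production-like environments can be sketched in a few lines of Python. This is a minimal illustration, not Fowler's or Humble and Farley's implementation: the `Stage` type, the `promote` and `is_releasable` functions, and the stage names are all hypothetical, and each check is a stand-in for a real automated test suite.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One environment in the pipeline plus the automated check run there."""
    name: str
    check: Callable[[str], bool]  # hypothetical: returns True when the build passes


def promote(build_id: str, stages: List[Stage]) -> List[str]:
    """Push a build through the stages in order and stop at the first failure.

    Returns the names of the stages the build passed.
    """
    passed: List[str] = []
    for stage in stages:
        if not stage.check(build_id):
            break  # fast feedback: later, costlier stages never run
        passed.append(stage.name)
    return passed


def is_releasable(build_id: str, stages: List[Stage]) -> bool:
    """A build counts as deployable only if it cleared every stage."""
    return len(promote(build_id, stages)) == len(stages)


# Stand-in checks (assumptions); a real pipeline would run test suites here.
PIPELINE = [
    Stage("commit", lambda build: True),
    Stage("acceptance", lambda build: not build.endswith("-broken")),
    Stage("staging", lambda build: True),
]
```

With these stand-ins, `promote("build-43-broken", PIPELINE)` stops after the commit stage, so the failing build never reaches the staging environment.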

The value of continuous delivery

If implemented correctly, a continuous delivery model can reduce risk, because software changes are made in an incremental fashion. For the same reason, continuous delivery makes it easier to roll back changes or improve on them in response to user feedback. And because the changes are deployed into a production environment, progress is easier to track and is not dependent on someone else’s definition of "done" regarding a given project, says Fowler.

A maturity model for continuous delivery

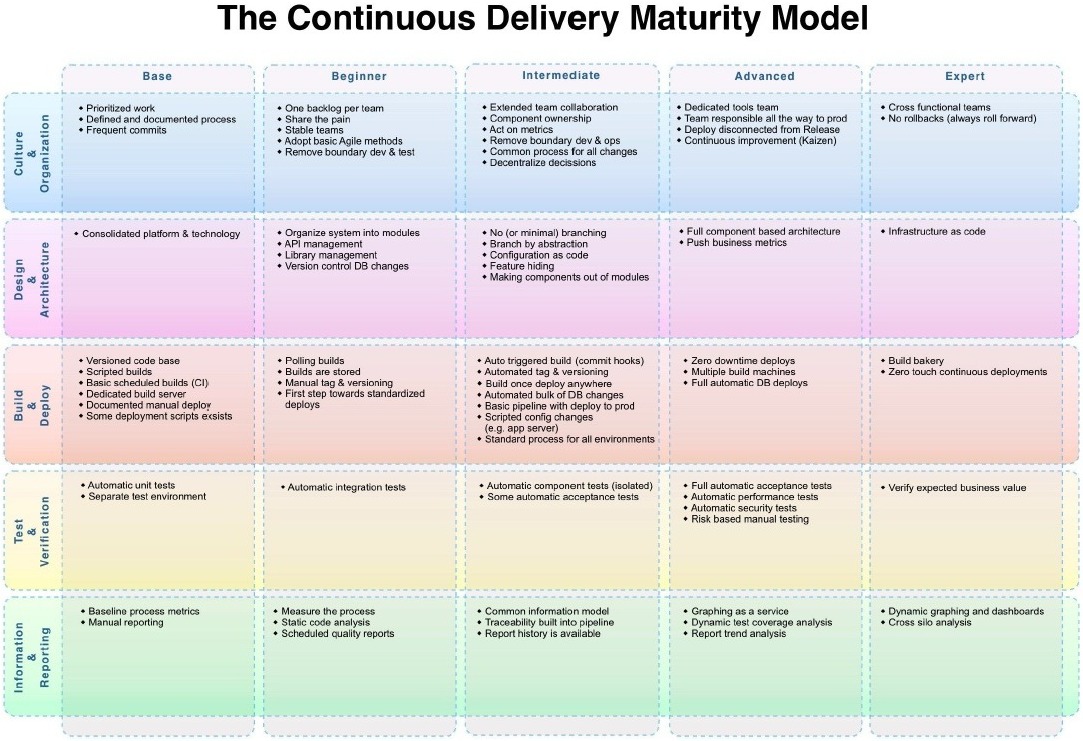

For any organization of reasonable size, implementing a continuous delivery pipeline can be an overwhelming challenge. This notion is supported by software architects Andreas Rehn, Tobias Palmborg, and Patrik Bostrom, who in 2013 proposed a maturity model for continuous delivery. Their maturity model is shown below; you can go to their web page for a more readable version.

The model is designed to lend some formal structure to continuous delivery implementation, given the long and often cumbersome process of developing and delivering software.

The intersection of containers and continuous delivery

It's important to understand that continuous delivery involves a lot more than just using containers to push out code changes more quickly than before.

Containers allow developers to isolate code to a single self-contained function, which certainly makes it easier to modify and update the code. They also make it easier for enterprises to break up a large application development project among multiple smaller, agile teams, so the focus is on concurrent releases by multiple teams.

Vendors of container technology have fueled some of the excitement around the applicability of containers in a continuous delivery pipeline. Docker says that organizations that have integrated its container technology into their continuous integration pipeline have benefited from accelerated system provisioning, faster job times, and an increase in the volume of jobs run.

How containers can complicate continuous delivery

It is important to remember, however, that containers do not remove any of the requirements of continuous delivery. If anything, they complicate them. Here are four misconceptions about containers that you and your continuous delivery team should be aware of.

1. Myth: With containers, you do not need to worry about configuration or construction details

Containers, as described in this blog post by Iron.io and ClusterHQ, are rooted in a world where developers run their applications on a single system. That’s very different from typical production apps at medium to large enterprises that run on multiple computers. As the blog points out, containerized application development in such an environment requires attention to a number of things, including composition, scheduling, networking, and storage.

You need to know how to build an application or service with multiple interconnected containers. Then you need to be able to schedule them to run across multiple machines and figure out a way for them to communicate with one another from across the multiple host systems.

Implementing all of this involves specific and separate challenges that have a direct impact on your ability to do continuous delivery. Make sure you know what they are and what you need to do to address them.
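To make the composition, scheduling, and networking concerns concrete, here is a small Python sketch. It is not how any particular orchestrator works: the `schedule` function uses a simple first-fit rule chosen for illustration, and the service names, host names, and memory figures are all made up.

```python
from typing import Dict, List, Tuple


def schedule(services: Dict[str, int], hosts: Dict[str, int]) -> Dict[str, str]:
    """First-fit placement of containers onto hosts.

    services maps a service name to the memory it needs (GB, hypothetical);
    hosts maps a host name to the memory it has free. Largest services go first.
    """
    free = dict(hosts)  # copy so the caller's dict is not modified
    placement: Dict[str, str] = {}
    for name, need in sorted(services.items(), key=lambda kv: -kv[1]):
        for host in free:
            if free[host] >= need:
                placement[name] = host
                free[host] -= need
                break
        else:
            raise RuntimeError(f"no host has room for {name}")
    return placement


def cross_host_links(
    links: List[Tuple[str, str]], placement: Dict[str, str]
) -> List[Tuple[str, str]]:
    """Return the service-to-service links that must cross a host boundary."""
    return [(a, b) for a, b in links if placement[a] != placement[b]]


# Illustrative three-container application (all values are assumptions).
SERVICES = {"web": 2, "api": 3, "db": 4}
HOSTS = {"host-a": 5, "host-b": 5}
LINKS = [("web", "api"), ("api", "db")]

PLACEMENT = schedule(SERVICES, HOSTS)
REMOTE = cross_host_links(LINKS, PLACEMENT)
```

Even in this toy case, the `api` and `db` containers end up on different hosts, so their link needs cross-host networking that a single-machine setup never had to consider.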

2. Myth: Containers solve composite app complexities and concurrent development challenges

Contrary to perception, complexity is another big issue with containerized development, especially because the approach is still relatively new and proven practices are still evolving. As I have noted previously, medium and large organizations can have 30 or more teams working concurrently on a project and code base.

Having multiple teams working concurrently on code makes it harder for development teams to assess the application as a whole and understand the impact of code changes, especially given the dependencies that may exist between containers. It is important to know how containers affect your ability to implement a continuous delivery pipeline, because that pipeline is critical to ensuring that issues that crop up during the development, integration, testing, and acceptance stages don’t end up in production.

3. Myth: Since everything is built-in and portable, we don't need to worry about network connections and dependencies

Networks and the connected nature of end users—whether we're talking about the Internet of Things, mobile, automotive, or other networks we can point to—are an increasingly important factor in the quality and performance of applications.

The application architectures in nearly all organizations today have been built with dependencies across the stack, including internal, external, and shared dependencies. As a result, continuously changing versions and deployments need to be carefully managed. Intricately intertwined versions are not always deployed and tested across all combinations of supported end-user scenarios; the ever-changing landscape of application and infrastructure architectures is not made less complex with container technologies.

You need to understand how containers impact your ability to do continuous integration and delivery, especially in multi-team, concurrent application development. When everything is so intertwined, how do you verify that new code can be integrated and tested in a manner consistent with continuous delivery practices? What is the impact on your business when these dependencies are missed and your end user is unable to use your products and services?
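One way to picture the dependency question is a check that compares what is about to be deployed with the version combinations each service was actually tested against. The Python sketch below is only an illustration under stated assumptions: the `untested_combinations` function, the service names, and the version strings are hypothetical, and real systems would pull this data from their own build and test records.

```python
from typing import Dict, List, Optional, Set, Tuple


def untested_combinations(
    deployed: Dict[str, str],
    tested_against: Dict[str, Dict[str, Set[str]]],
) -> List[Tuple[str, str, Optional[str]]]:
    """Find dependencies whose deployed version a service was never tested with.

    deployed maps a service name to the version going out.
    tested_against maps a service to {dependency: versions it was tested with}.
    Returns (service, dependency, deployed_version) triples; the version is
    None when the dependency is not deployed at all.
    """
    problems: List[Tuple[str, str, Optional[str]]] = []
    for service, deps in tested_against.items():
        for dep, versions in deps.items():
            actual = deployed.get(dep)
            if actual not in versions:
                problems.append((service, dep, actual))
    return problems


# Illustrative data (assumptions, not from any real system).
DEPLOYED = {"web": "2.1", "api": "1.4", "db": "9.6"}
TESTED = {
    "web": {"api": {"1.3", "1.4"}},
    "api": {"db": {"9.5"}, "cache": {"3.0"}},
}

PROBLEMS = untested_combinations(DEPLOYED, TESTED)
```

Here `web` is fine, but `api` would ship against a database version it was never tested with and a cache that is not deployed at all. Packaging either service in a container does not change that result.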

4. Myth: With containers, you're automatically using production-sized data sets, so you've eliminated production risks

One of Fowler’s core requirements for continuous delivery is that developers be able to push their executables out to production or production-like systems in order to assess the impact. This requirement highlights a limitation in how container technologies are being implemented and used today: recreating large-scale data during development and test simply is not a common practice.

A goal of continuous integration and continuous delivery is to continuously test the core permutations supporting the end users for your products and services, using like-sized data and environments to confirm performance, security, functionality, usability, scalability, and more. The testing should verify continuous deployment into target environments throughout the cycle, increasing the speed and quality in an automated manner throughout the lifecycle.

You need to understand what production-size data sets do to your ability to ensure that code changes aren't breaking the production environment. Can you adopt container technologies that will enable continuous delivery practices, so you can deliver with speed and quality in production? How large are your development-stage datasets and environments compared to production?
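The last question can be turned into a simple, repeatable check. The Python sketch below compares row counts in a test environment with production and flags tables that fall below a chosen fraction. The `data_scale_gaps` function, the 50 percent threshold, and the table names and counts are all assumptions made for illustration.

```python
from typing import Dict


def data_scale_gaps(
    test_rows: Dict[str, int],
    prod_rows: Dict[str, int],
    min_ratio: float = 0.5,  # hypothetical threshold: test data at least half of production
) -> Dict[str, float]:
    """Return tables whose test data is too small relative to production.

    Maps each flagged table to its test-to-production size ratio.
    Tables missing from the test environment count as zero rows.
    """
    gaps: Dict[str, float] = {}
    for table, prod in prod_rows.items():
        if prod == 0:
            continue  # nothing in production to compare against
        ratio = test_rows.get(table, 0) / prod
        if ratio < min_ratio:
            gaps[table] = ratio
    return gaps


# Illustrative row counts (assumptions).
PROD = {"orders": 1000, "users": 2000, "audit_log": 4000}
TEST = {"orders": 250, "users": 2000}

GAPS = data_scale_gaps(TEST, PROD)
```

In this example `orders` and `audit_log` are flagged, which is a prompt to ask whether performance and scalability results from the test environment can be trusted.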

Keep your eyes wide open

Containers offer a way to speed application delivery and increase application portability. They hold a lot of potential to transform application development practices. But it is important to go into this with your eyes wide open and not get carried away by the hype surrounding the technology.

- If continuous delivery is your goal and using containers is the way you want to enable it, first understand what continuous delivery is really all about and figure out where in the maturity model (see above) you fit. That model defines five levels of maturity, from base to expert.

- Take the time to understand the implications of using container technologies, both capabilities and known limitations. You don’t just download a container technology, have all your problems solved, and suddenly find yourself up and running with robust continuous delivery.

- Define how your continuous delivery model is working and how you want to evolve it over time. Drive your broader technology strategy by adopting both containers and continuous delivery in a planned and coordinated way, so you'll get closer to the desired results.

- You'll achieve incremental value by starting small and seeing what works. As you begin to scale, be sure to gather and incorporate feedback from your continuous delivery cycles.

What do you think? Have containers breathed new life into your development and delivery schedules, or are you finding challenges at scale? A little of both? Let us hear from you in our comments section below.

Image credit: Flickr
