Have you ever had an idea catch fire and then spread through your workplace until the whole atmosphere changed? Maybe it was a new initiative or a fix to a broken process that changed your organization for good.

Have you ever had a similar experience with a test automation project you worked on? Maybe the test automation was so successful that everyone in the organization started using it in their pipelines to create higher-quality software, release products faster, and generate more revenue for the company.

For the majority of us, I can confidently assume that this has never been the case.

I spent years teaching test automation to over 50,000 students in over 100 countries. And since 2018, I have been working as a solution architect. This position allows me to work with dozens of clients and hundreds of automation engineers every year. And the main thing that I keep seeing over and over is that test automation is insanely hard.

Automation is tough to get right and extremely easy to get wrong. Don't believe me? Sauce Labs analyzed test pass rates across 2 billion tests executed all over the world in its Continuous Testing Cloud. It found that fewer than 20% of us run tests that pass more than 90% of the time. Even scarier, the largest cohort had pass rates between 50% and 75%.

There are hundreds of mistakes that can derail a test automation project and waste millions of dollars for companies. However, here are three that keep creeping in with most of the engineers I work with, and they are guaranteed to kill your automation project.

1. Not keeping things simple

The best test automation follows two essential rules: Keep It Simple, Stupid (KISS) and You Ain't Gonna Need It (YAGNI). These fundamental principles should guide hundreds of decisions every day. For example: "Should I use the page object pattern for my test automation?" Apply KISS, and the answer is absolutely.

There's a reason this pattern is still the default standard in test automation after 15 years. Why reinvent the wheel? Sounds logical, right?

Then why do we keep doing the opposite? Just last week, I came across a "page object" that contained only locators and had a name that made no sense. All the methods of all the page objects lived in a single file for "reusability." Even scarier was the "page object" itself: 10,500 lines of code that mostly manipulated query string parameters.
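For contrast, here is a minimal sketch of a page object that follows KISS: one small class per page, holding that page's locators together with the actions a user can take on it. It is written in Python purely for illustration; `FakeDriver`, `LoginPage`, and the CSS selectors are hypothetical stand-ins, not a real driver API.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser driver; it records what it is asked to do."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def type_into(self, locator, text):
        self.fields[locator] = text

    def click(self, locator):
        self.clicked.append(locator)


class LoginPage:
    """One class for one page: its locators plus the behavior it offers."""

    # Locators live next to the methods that use them, not in a separate file.
    USERNAME_FIELD = "#user-name"
    PASSWORD_FIELD = "#password"
    SUBMIT_BUTTON = "#login-button"

    def __init__(self, driver):
        self.driver = driver

    def login(self, username, password):
        # Tests call this one method instead of repeating three UI steps.
        self.driver.type_into(self.USERNAME_FIELD, username)
        self.driver.type_into(self.PASSWORD_FIELD, password)
        self.driver.click(self.SUBMIT_BUTTON)


if __name__ == "__main__":
    driver = FakeDriver()
    LoginPage(driver).login("standard_user", "secret")
    print(driver.fields, driver.clicked)
```

If a locator changes, the fix happens in exactly one place, and the class stays small enough to read in a minute.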

Another decision I deal with every day is whether a team should use behavior-driven development (BDD) for its test automation. The first question to ask is whether it's really simple: do you want to learn a new tool, a new syntax, and a new set of rules?

That sounds more complicated and like more work, so it doesn't follow KISS.

Second, is the purpose of BDD automation to improve collaboration between devs, QA, and business analysts, or is the purpose to write easy-to-read sentences in your test automation? If it's the latter, then You Ain't Gonna Need It, pal.

As you write your test automation, keep these two rules in mind with every decision you make, from high-level ones about architecture to low-level ones such as whether to wrap a line of code in a separate method.

If you don't, every line of code is a move in the wrong direction. At some point, your code becomes so rotten and unmaintainable that you stop doing your real job of creating higher-quality software. Instead, you spend your time fixing locators and synchronization issues and hunting for the most advanced uses of XPath.

2. Having non-atomic tests

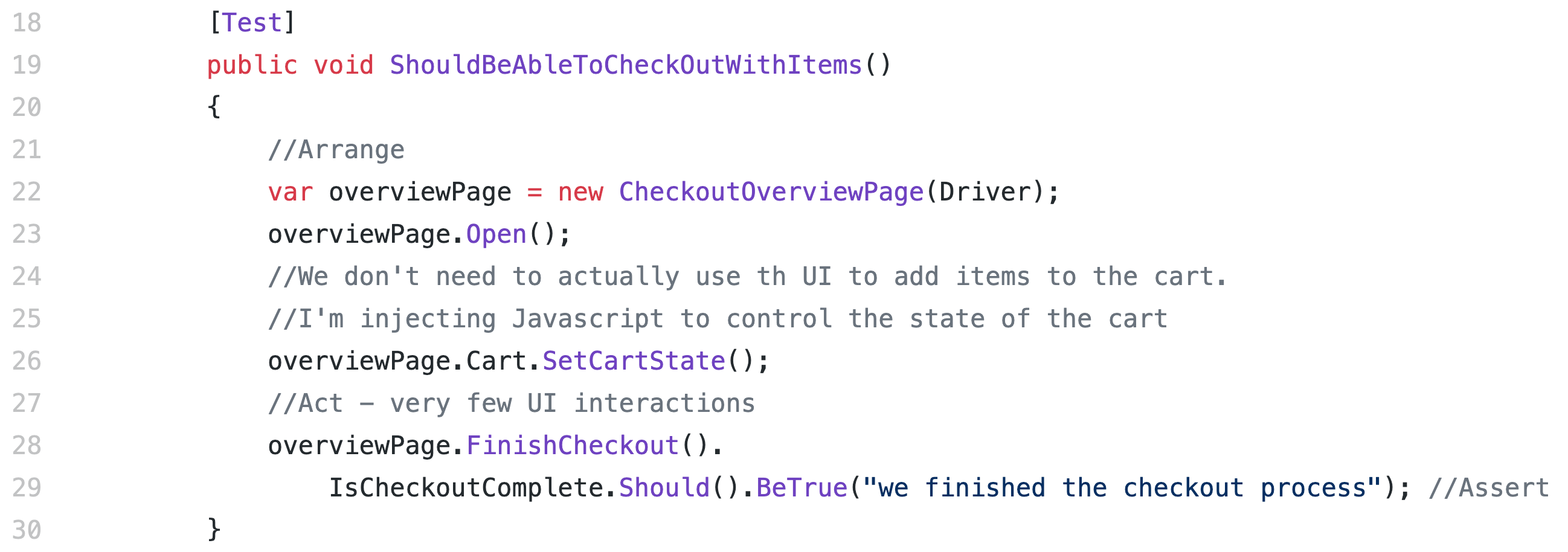

An atomic test checks only one piece of functionality. The example below uses the UI to validate that a user can successfully check out:

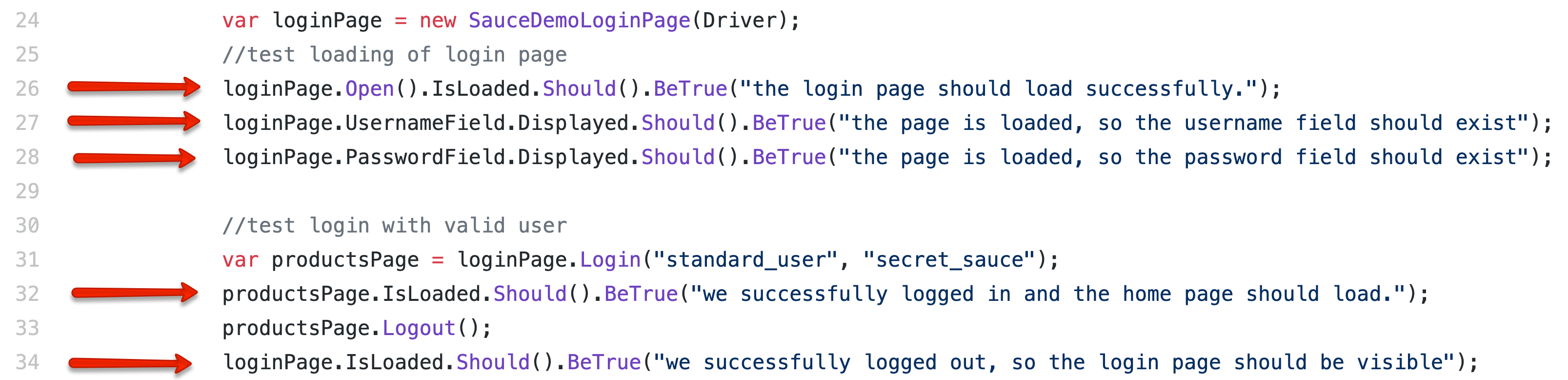

This test has a single assertion, so it's most likely atomic. On the other hand, the test below is not atomic.

You can tell that it's testing that the page loads, that some fields are present, that a user can log in, and that a user can log out. The multiple assertions are a pretty dead giveaway as well.
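The difference can be sketched in plain Python against a hypothetical in-memory app. `FakeShop` and both test functions are illustrative assumptions, not the article's actual tests: the first test verifies one behavior, while the second bundles the four checks described above into one test.

```python
class FakeShop:
    """Hypothetical in-memory stand-in for the web app under test."""

    def __init__(self):
        self.user = None
        self.cart = []

    def title(self):
        return "Swag Shop"

    def has_field(self, name):
        return name in ("username", "password")

    def login(self, user):
        self.user = user

    def logout(self):
        self.user = None

    def add_to_cart(self, item):
        self.cart.append(item)

    def checkout(self):
        # Checkout succeeds only for a logged-in user with a non-empty cart.
        if self.user is None or not self.cart:
            return False
        self.cart.clear()
        return True


def test_user_can_check_out():
    # Atomic: set up the state it needs, then verify exactly one behavior.
    shop = FakeShop()
    shop.login("standard_user")
    shop.add_to_cart("backpack")
    assert shop.checkout()


def test_login_page():
    # Not atomic: four behaviors, four assertions, four reasons to fail.
    shop = FakeShop()
    assert shop.title() == "Swag Shop"   # the page loads
    assert shop.has_field("username")    # some fields are present
    shop.login("standard_user")
    assert shop.user == "standard_user"  # a user can log in
    shop.logout()
    assert shop.user is None             # a user can log out
```

If the first assertion in `test_login_page` fails, the login and logout checks never run, so a single red result leaves three behaviors untested and tells you little about which part of the system broke.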

Interestingly, the second example above is not even that bad. Every day, in 90% of my interactions, I see worse. (If you want to learn how to break up your tests to make them atomic, that topic is covered in depth here.)

The further away you move from an atomic test, the less stability, efficiency, and determinism you get from your actual GUI automation. (A deterministic system is one in which no randomness is involved in the development of future states of the system.)

Every web UI interaction is a chance for something to go wrong. An invalid element locator, an improper synchronization point, or a page update are just some of the things that can cause a failure. Hence, the more of these interactions a test has, the less stable it becomes.
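A quick back-of-the-envelope calculation shows how fast this compounds. Assume, purely for illustration, that each UI interaction succeeds 99% of the time and fails independently of the others. A test passes only if every interaction succeeds, so its pass rate is the per-step rate raised to the number of steps. Real failures are rarely independent, so treat this as a rough model rather than a prediction.

```python
def pass_probability(step_success_rate: float, steps: int) -> float:
    """Chance that a test passes when every one of its steps must succeed.

    Simplifying assumption: each UI interaction fails independently of the others.
    """
    return step_success_rate ** steps


if __name__ == "__main__":
    for steps in (1, 5, 10, 25, 50):
        print(f"{steps:>2} interactions: {pass_probability(0.99, steps):.1%}")
```

Under that assumption, a test with 10 interactions passes about 90.4% of the time, and one with 50 interactions only about 60.5%, even though every individual step is 99% reliable.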

As a result, your automated test becomes less efficient, since you have to spend drastically more time debugging false positives. Compare an automated test that executes in 20 minutes with one that executes in 20 seconds. If the first test fails at minute 19, that's 19 minutes you have to wait before you can even start debugging the failure. And if the cause is anything harder than a locator change, you could spend an hour figuring out why it failed.

This leads to the final point: the least atomic tests are also the least deterministic, meaning they provide the worst insight into failures of the system. Your GUI tests fail for all sorts of random reasons other than real bugs. And you spend your time chasing broken element locators, complicated element locators, and invalid synchronizations instead of helping the company deliver higher-quality software in less time.

This kind of inefficiency spreads through an automation project like a virus. In the end, the project is left with a team of automation engineers, costing $1 million per year, who spend most of their time fixing bugs in the automation code.

3. Trying to convert manual testing to automation

Any project that attempts to convert the manual testing suite to an automated testing suite is guaranteed to fail. This idea and process are fundamentally flawed. First, you don't code test automation in the same way that you execute manual testing. Second, not all manual testing should be automated, especially at the GUI level.

A manual test has one thing that test automation does not: intelligence. A 30-minute manual end-to-end test may uncover a ton of different bugs in the application, because the tester can intuitively spot inconsistent behavior.

Automation, on the other hand, has never found, and currently cannot find, any bugs beyond the validations its code tells it to perform. The entire web page may fail to render, but if you told your UI automation to check only that the URL contains the correct string, that automation will keep passing.

However, the solution is not to add all validations to a single test (see mistake 2, above). The solution is to correctly apply mocking and state management to get your application into the desired configuration. Afterward, validate that your GUI application behaves as expected.

In this example, the SetCartState() method on line 26 injects JavaScript into the web application so that you can set the desired state of the app by injecting a user and some items into the cart. Ultimately, this allows you to validate that the user can correctly complete the checkout process through the GUI.
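A rough Python sketch of this kind of state injection follows. It assumes a Selenium-style driver that exposes `execute_script(script, *args)` and `refresh()`, both of which exist on Selenium's Python WebDriver. The function name `set_cart_state`, the `RecordingDriver` stand-in, and the localStorage keys are hypothetical; a real application keeps its session and cart state wherever its own code puts it, so the script must match that.

```python
import json


def set_cart_state(driver, username, item_ids):
    """Seed a user session and cart contents directly, skipping the UI steps.

    Hypothetical assumption: the app under test restores its session from the
    localStorage keys 'session-username' and 'cart-contents' on page load.
    """
    script = (
        "window.localStorage.setItem('session-username', arguments[0]);"
        "window.localStorage.setItem('cart-contents', arguments[1]);"
    )
    driver.execute_script(script, username, json.dumps(item_ids))
    # Reload so the app reads the injected state.
    driver.refresh()


class RecordingDriver:
    """Hypothetical stand-in that records calls instead of driving a browser."""

    def __init__(self):
        self.scripts = []
        self.refreshed = False

    def execute_script(self, script, *args):
        self.scripts.append((script, args))

    def refresh(self):
        self.refreshed = True
```

With a real browser session, you would first navigate to a page on the app's origin (localStorage is scoped per origin), call `set_cart_state` with your driver instance, and then drive only the checkout steps through the UI before asserting on the result.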

Attempting to do anything more through the web UI will lead you away from an atomic test and into all of the problems described in mistake 2. Hence, coding test automation is a fundamentally different process from executing manual test cases.

Don't over-automate

Furthermore, don't try to automate all your manual tests, especially at the GUI level. This is mainly because there are far more efficient places than the web UI to do test automation, such as the unit and integration layers.

It's hard to create a general rule, since applications differ so much. But for the most part, you probably have the right level of GUI automation coverage if:

- All GUI tests execute in under 30 seconds on your local resources.

- All GUI tests have at least 99.5% reliability.

- The whole suite can execute in under 10 minutes.

If any of these metrics falls short, you probably have too much GUI automation. It's time to stop and make sure you're adding test automation at the correct system level.
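These rules of thumb are easy to check mechanically. The sketch below encodes the three thresholds above; the `GuiTestStats` record and the way its numbers are collected are assumptions for illustration, and "reliability" is read here as the share of runs that passed, where every run is assumed to have had no real bug present.

```python
from dataclasses import dataclass


@dataclass
class GuiTestStats:
    name: str
    duration_seconds: float  # local run time of one execution
    runs: int                # how many times the test was executed
    passes: int              # how many of those runs passed


def coverage_warnings(tests, suite_minutes):
    """Return every rule of thumb above that this GUI suite violates."""
    warnings = []
    for t in tests:
        if t.duration_seconds >= 30:
            warnings.append(f"{t.name}: {t.duration_seconds:.0f}s (limit: under 30s)")
        reliability = t.passes / t.runs if t.runs else 0.0
        if reliability < 0.995:
            warnings.append(f"{t.name}: {reliability:.1%} reliable (target: 99.5%)")
    if suite_minutes >= 10:
        warnings.append(f"suite: {suite_minutes:.0f} min (limit: under 10 min)")
    return warnings


if __name__ == "__main__":
    suite = [
        GuiTestStats("checkout", 12, 1000, 998),
        GuiTestStats("end_to_end_everything", 45, 1000, 900),
    ]
    for warning in coverage_warnings(suite, suite_minutes=12):
        print(warning)
```

An empty list suggests the GUI layer is about the right size; any warning is a cue to push coverage down to the API, integration, or unit layers.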

The right solution to this problem is to let the automation engineer determine the appropriate tests to perform at the UI and API layers. Further, the engineer can work with developers to encourage more unit testing above all else.

If at some point it no longer makes sense to add more GUI automation, that's okay. You can then work toward adding more automation at more efficient layers of your system.

Trying to convert a manual suite into an automated suite is a fundamentally flawed process that is guaranteed to waste the resources of your employer. I have never seen it work in my entire career, and I help companies battle this fire every single day.

Stay free of fails

GUI test automation is insanely hard, as proven by the sad test execution metrics from around the globe. These three mistakes are behind those failures. Make even one of them and your test automation project is guaranteed to fail. Make all three and even God himself won't be able to untangle the mess.

Have you seen these mistakes destroy automation projects? Let me know in the comments below; I'd love to hear your story.
